The data quality module allows the definition and implementation of quality rules, integrating with the glossary of concepts at the definition level and the data catalog at the implementation level.

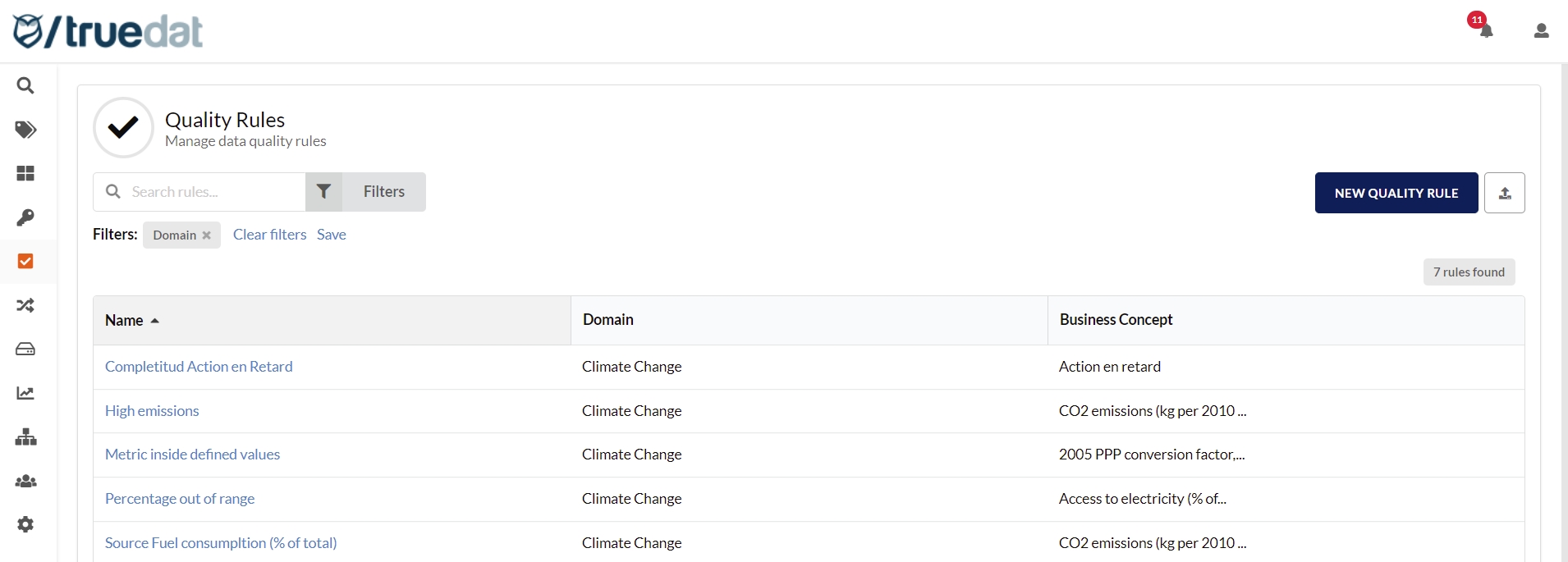

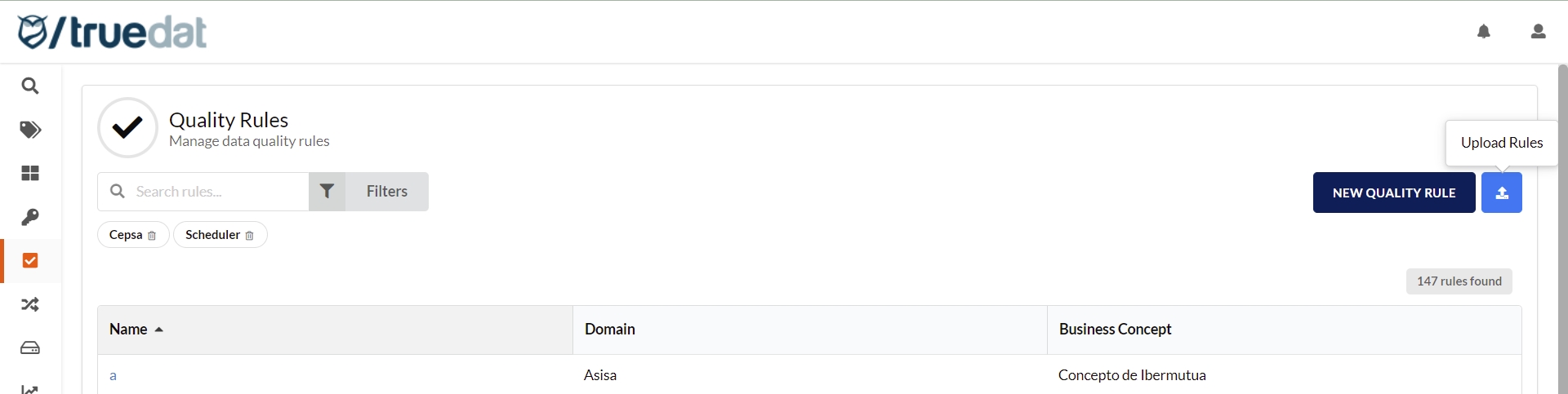

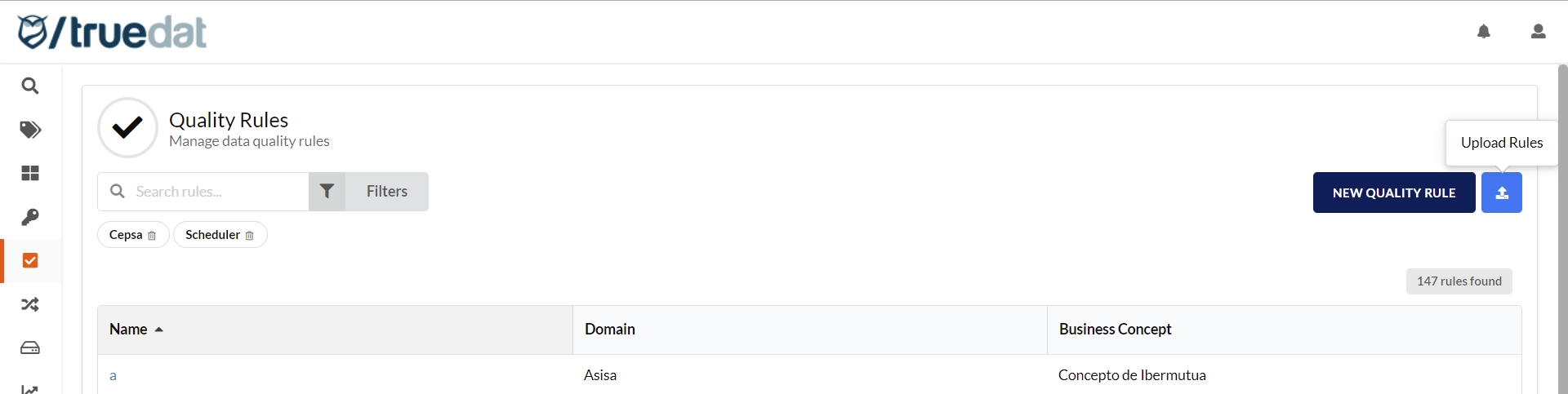

When you access the quality module, a list of quality rules is displayed. By default, this list shows the concept each quality rule is linked to, if any, and the data domain it belongs to. The columns of this view can be customized in each installation and can display any field of the quality rule template (see the Template management section for more information on templates).

You can filter the search by active / inactive rules, domain the rule belongs to, type of rule (template used) and additionally, any field from the templates that are defined as a list of fixed values.

Definition of quality from a business point of view. It should explain why the rule is needed, how the quality of this data affects the business, how the rule should be implemented and any other information considered relevant from a business/functional point of view.

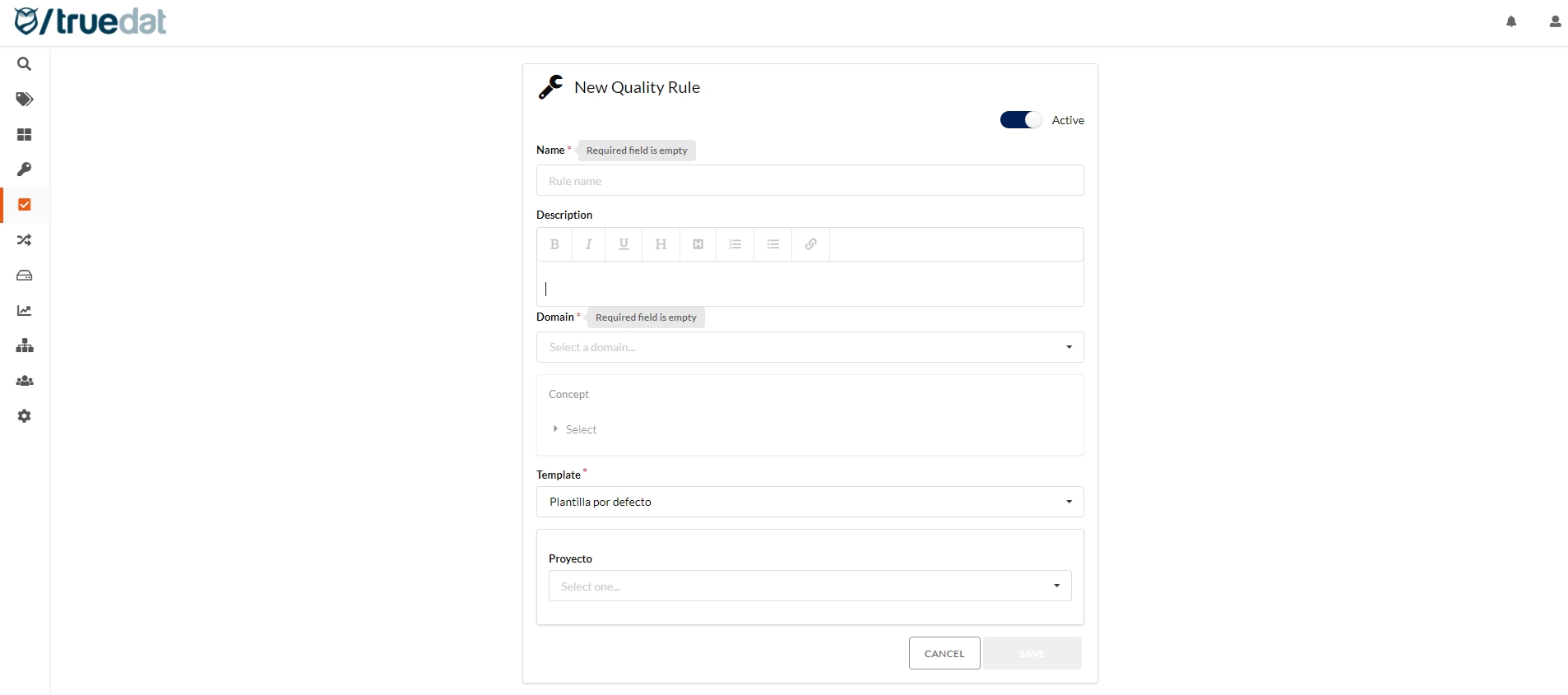

A quality rule consists of standard fields common to all installations and fields that may be customized in each installation using the template management feature.

Name: Name that identifies the validation and that will be displayed when this rule appears in a list. The objective of this field is to be able to quickly identify the validation in question.

Description: Detailed description of how this validation should be performed and what we want to obtain as a result. A rich text field will be available to enter this description.

Domain: Domain in which the rule will be stored. The domain determines who has permission to alter the rule, create implementations and execute implementations.

Concept: Optionally you will be able to select a concept to which the rule is applied.

Active: If the rule is active, the quality implementations associated with it will be executed.

In each installation, the required fields can be configured through template management.

Quality rules can be defined in three different ways:

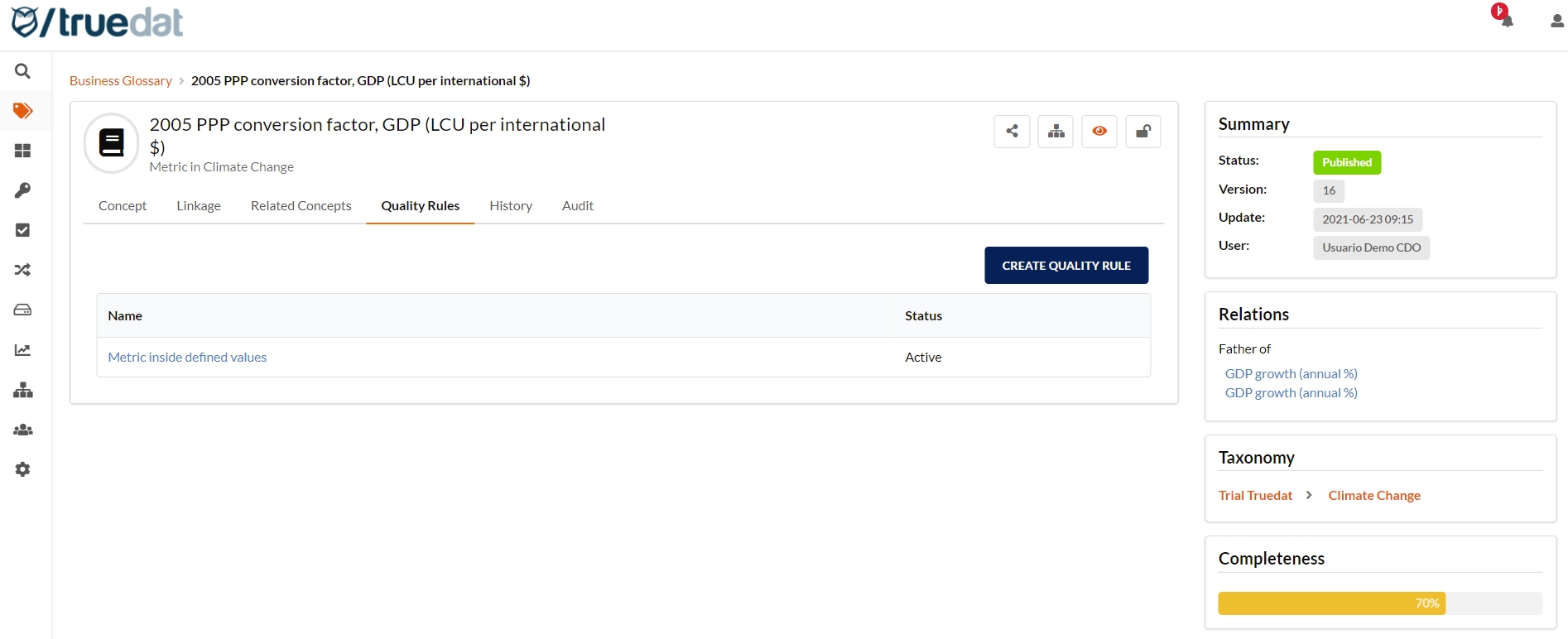

From a business concept. In this case, they will be a functional definition of validations to be performed on the given business concept to ensure its validity. To define these rules, a business concept will be accessed and you will be able to create the rule in the "Quality Rules" tab. Concept and domain will be automatically assigned to the rule.

From the Data Quality module: In this case you will need to enter the rule's domain and will be able to select a concept to be linked to the rule although this is optional. In the Data Quality Rules main screen, click on the 'New Quality Rule' button to access the form to enter the rule information.

Quality rules define the quality validations at functional level and the implementations define how these validations should be applied to our data. Take into account that the same rule can have several implementations since we may want to apply it to different data within our systems. Defining quality rules is not mandatory so you may create quality implementations that are not linked to quality rules.

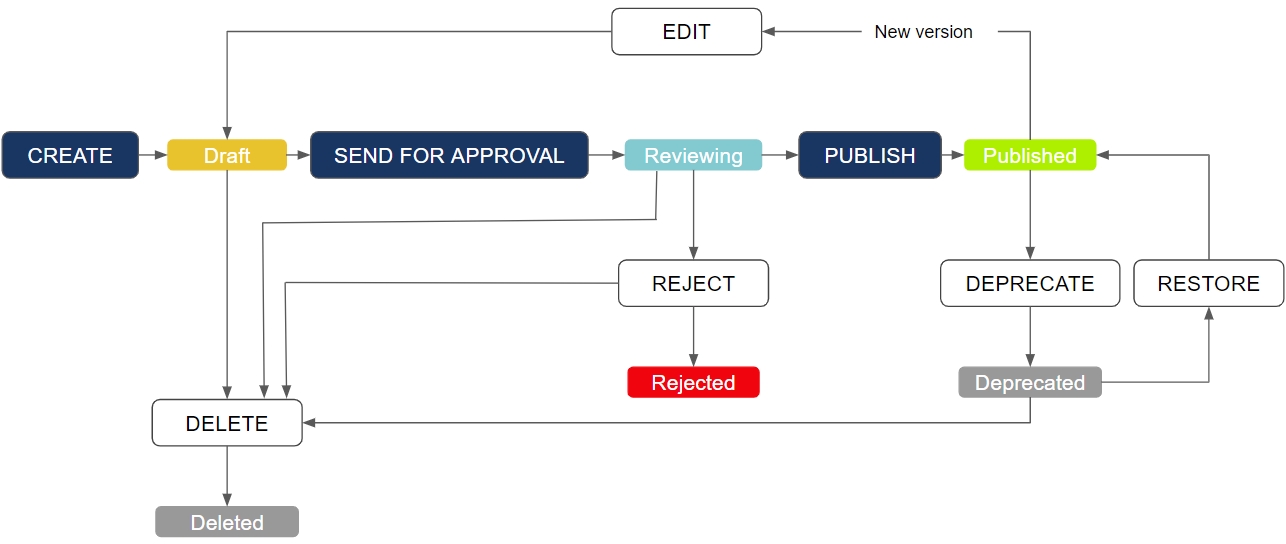

The creation of implementations can go through an approval process. Using the permissions linked to this functionality, certain roles can be allowed to create implementations in draft status and send them for approval, while a different role can be allowed to review implementations and approve or reject them.

If you do not want to implement this workflow, simply give the permissions to manage and to review implementations to the quality roles defined in your installation.

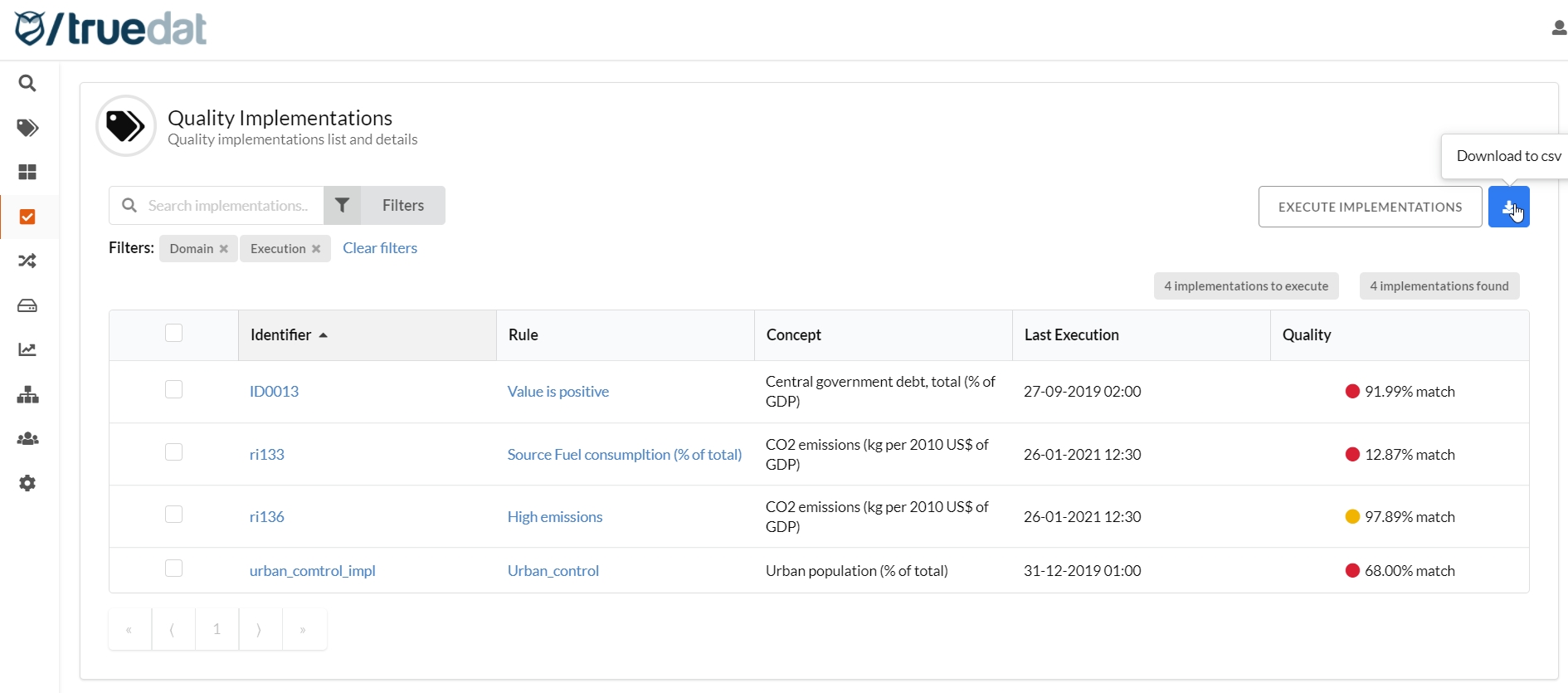

You will be able to access implementations in the following ways:

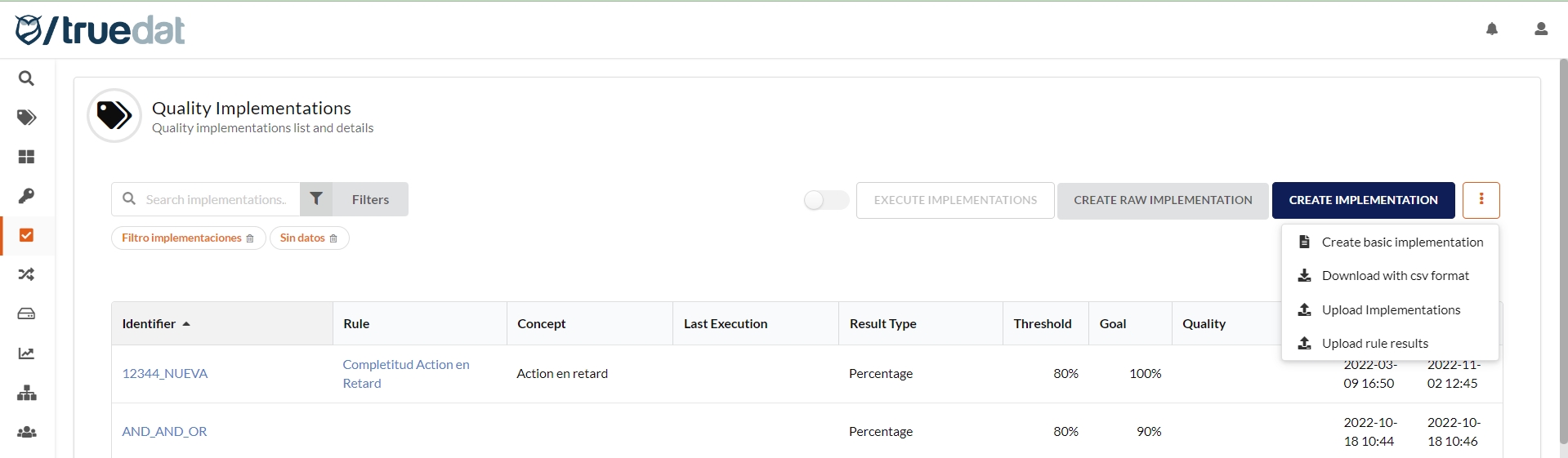

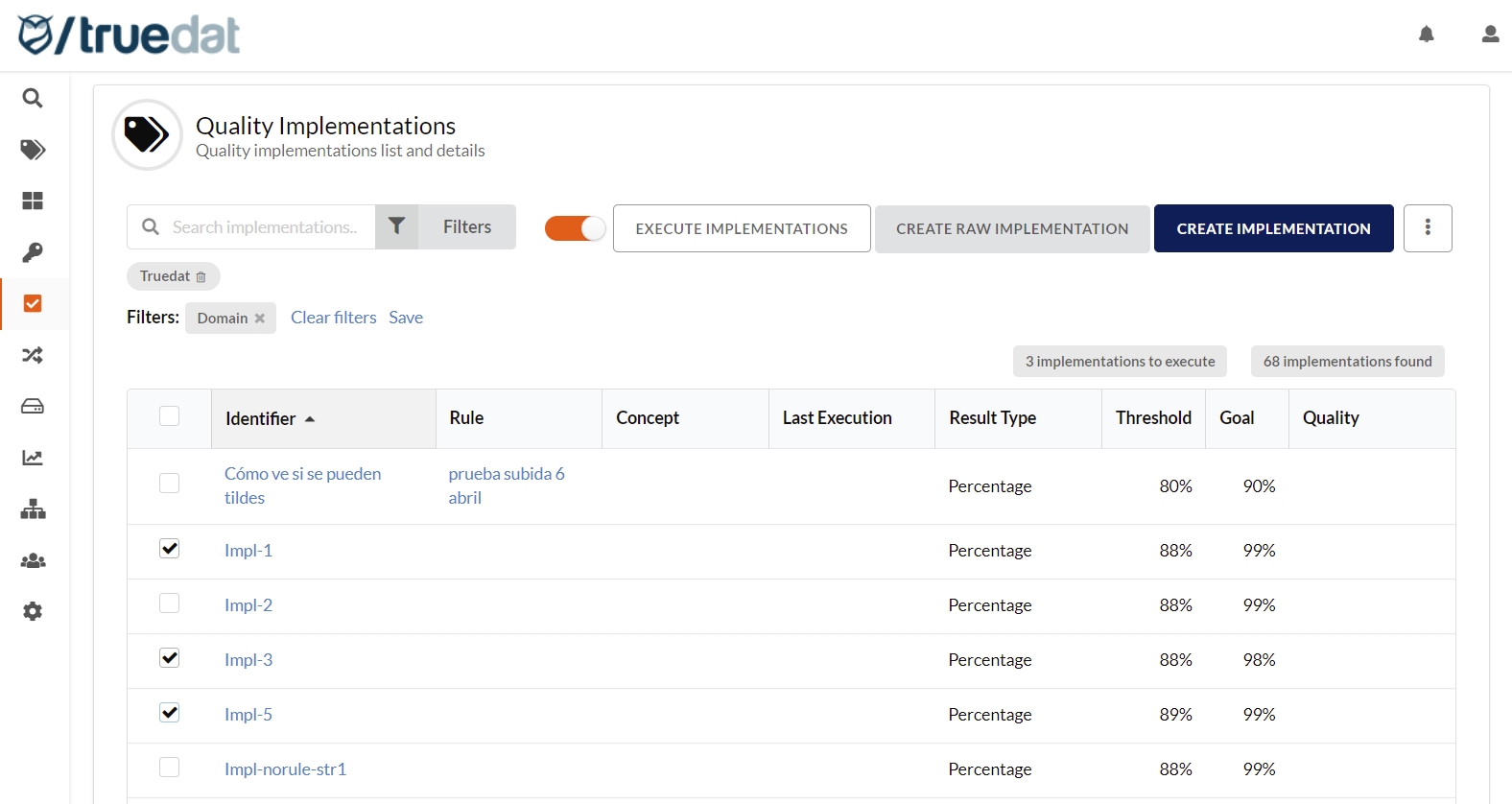

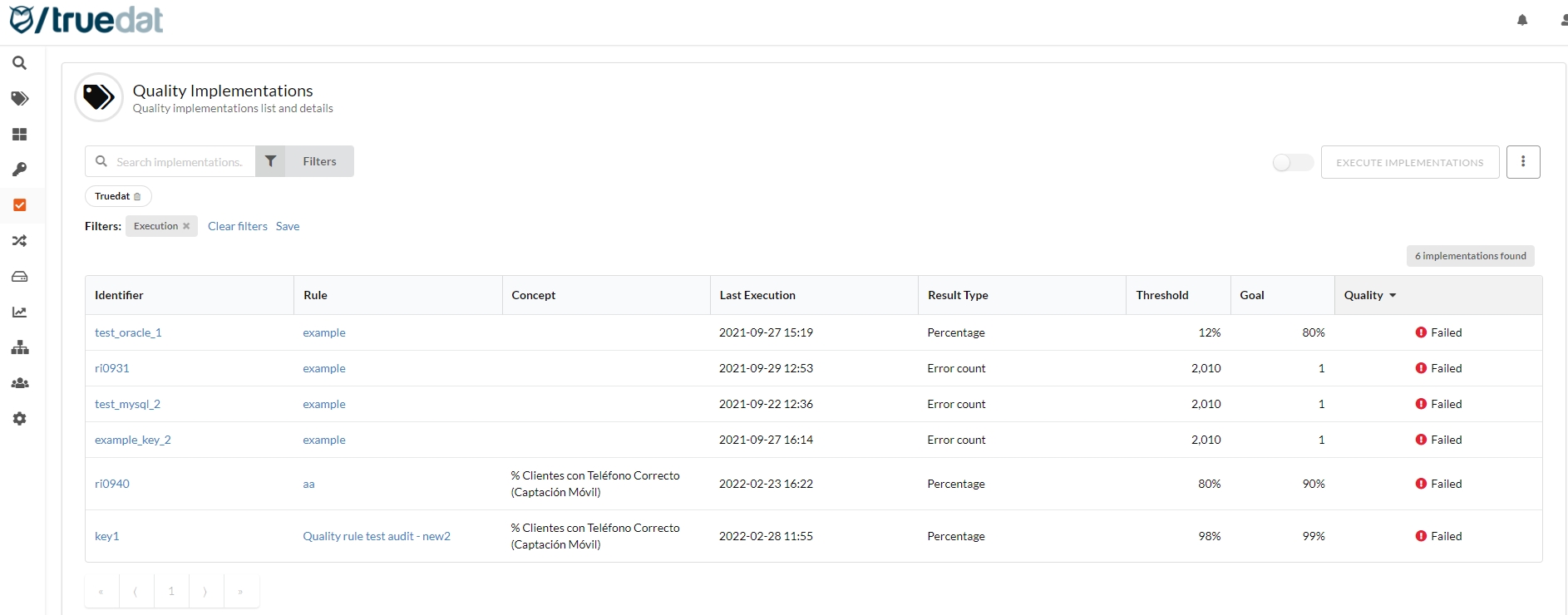

List of all implementations through the sidebar menu with download data, execute quality, search and filter capabilities.

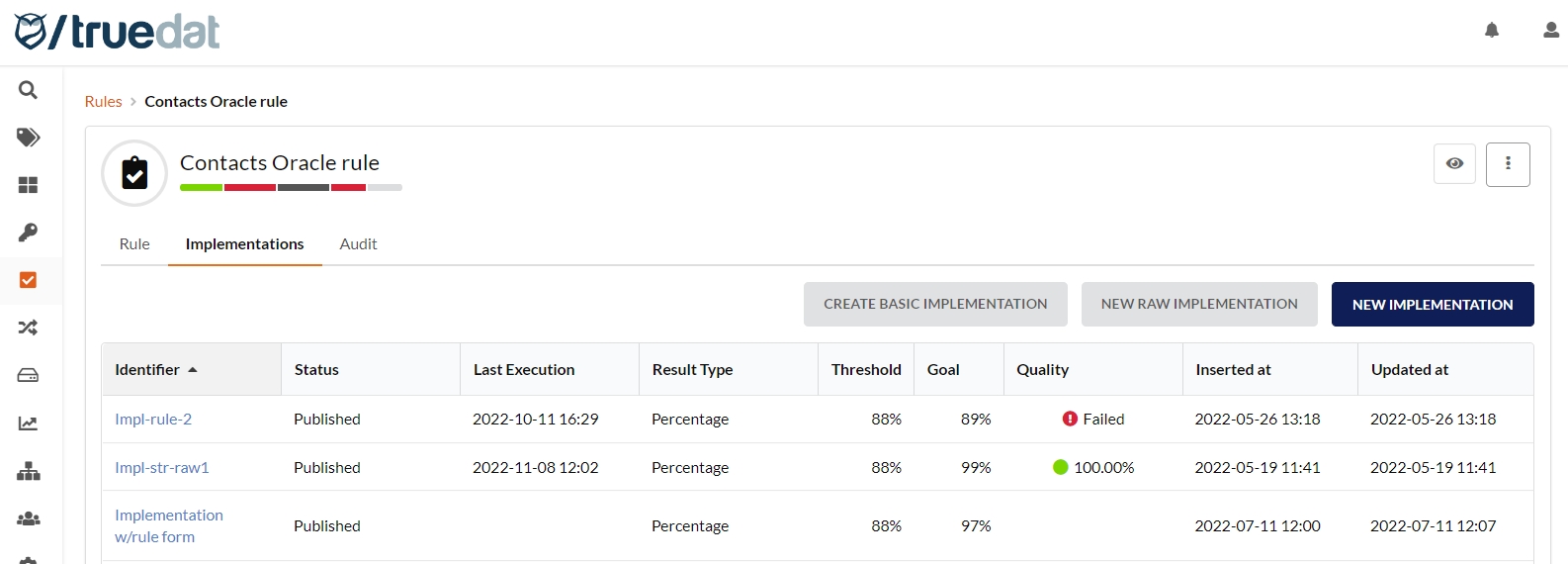

List of implementations related to a quality rule as a tab inside the quality rule.

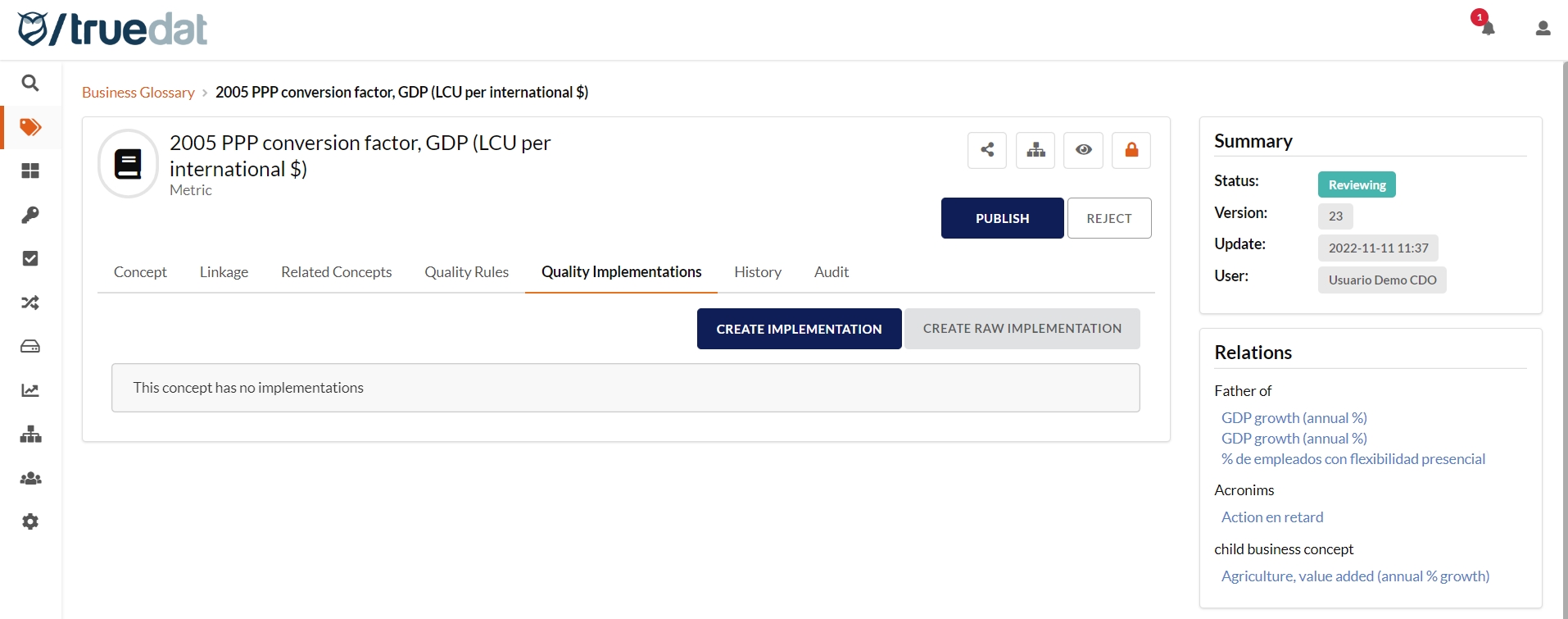

List of implementations linked directly to a business concept as a tab inside the business concept.

List of implementations for one data asset to be found in the data catalog.

Also, if your implementations go through an approval workflow, you can view those that are not published yet in the menu option Drafts under Data Quality.

You can create a new implementation either linked to a quality rule or on its own.

Within the creation of new implementations, depending on the permissions of each user, two types of implementations can be registered: simple implementations that can be created following a multi-step form and raw/native implementations which are usually more complex and are set up by introducing SQL code. Depending on whether the implementation is linked to a rule or not, you will have to go to the Quality Rules menu option or the Implementations menu option.

Option 1: Implementation linked to a rule

The implementation will be created from the quality rule, in the Implementations tab.

There are three types of implementations you can create:

1) Form implementation: Clicking on the "New Implementation" button on the right-hand side of the screen will take you to a multi-step form which will guide you through creating an implementation.

2) Raw/native implementation: If you want to set up a more complex implementation, click on the "New Raw Implementation" button.

3) Basic implementation: Click on "Create basic implementation" to register implementations with just the basic information (id, result type, threshold and goal) without having to input additional information related to the dataset or validation.

Option 2: Standalone Implementation

There are two ways to create implementations when these are not linked to a quality rule:

1) From the list of implementations screen in the Data Quality module.

2) From a concept in the Business Glossary module if you want the implementation to be linked to a business concept.

There are three types of implementations you can create:

1) Form implementation: Clicking on the "New Implementation" button on the right-hand side of the screen will take you to a multi-step form which will guide you through creating an implementation.

2) Raw/native implementation: If you want to set up a more complex implementation, click on the "New Raw Implementation" button.

3) Basic implementation: You can also register implementations with just the basic information (id, result type, threshold and goal) without having to input additional information on the dataset or validation. In order to create this type of implementation, click on the 3-dot menu button and select "Create basic implementation".

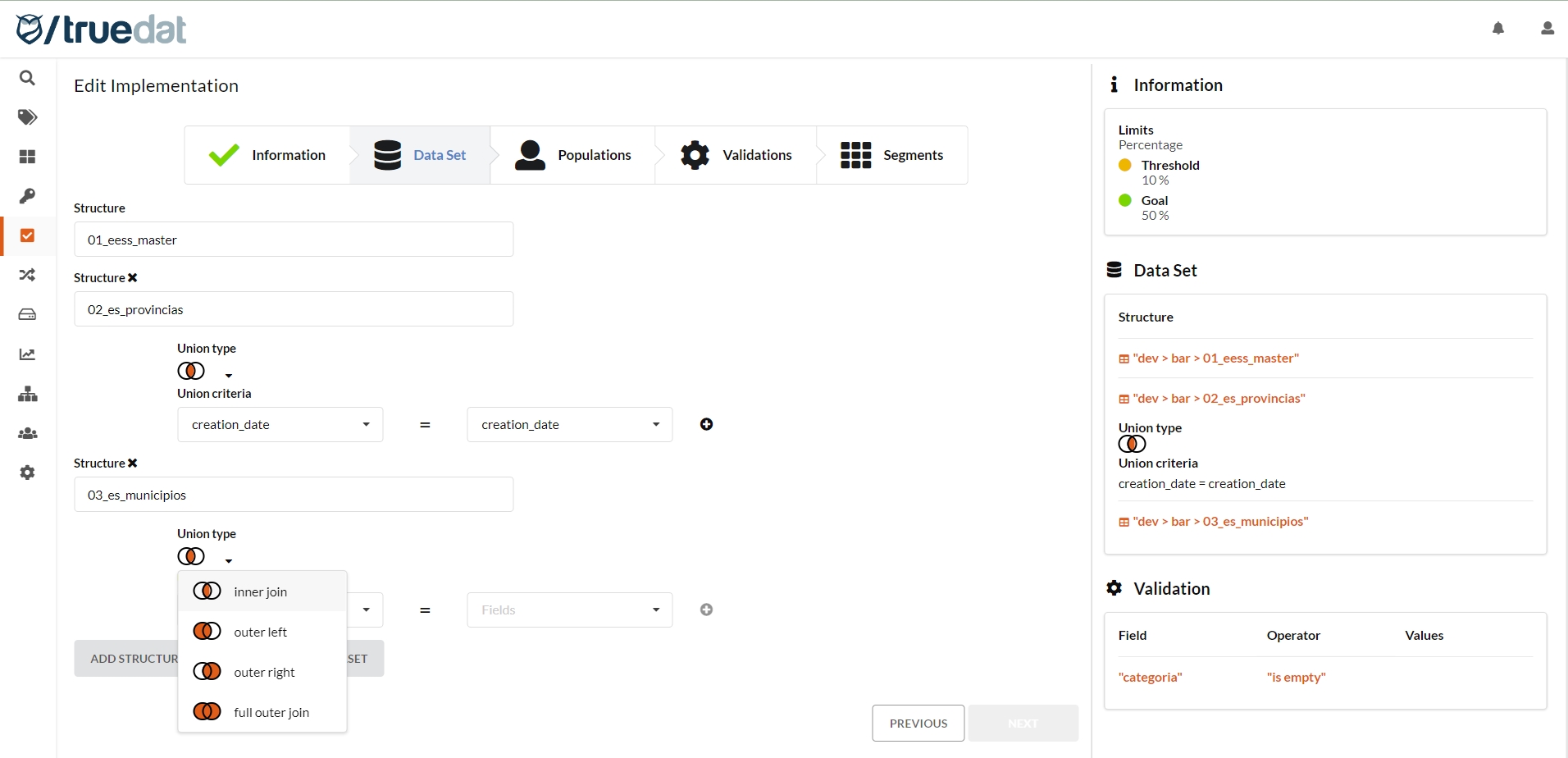

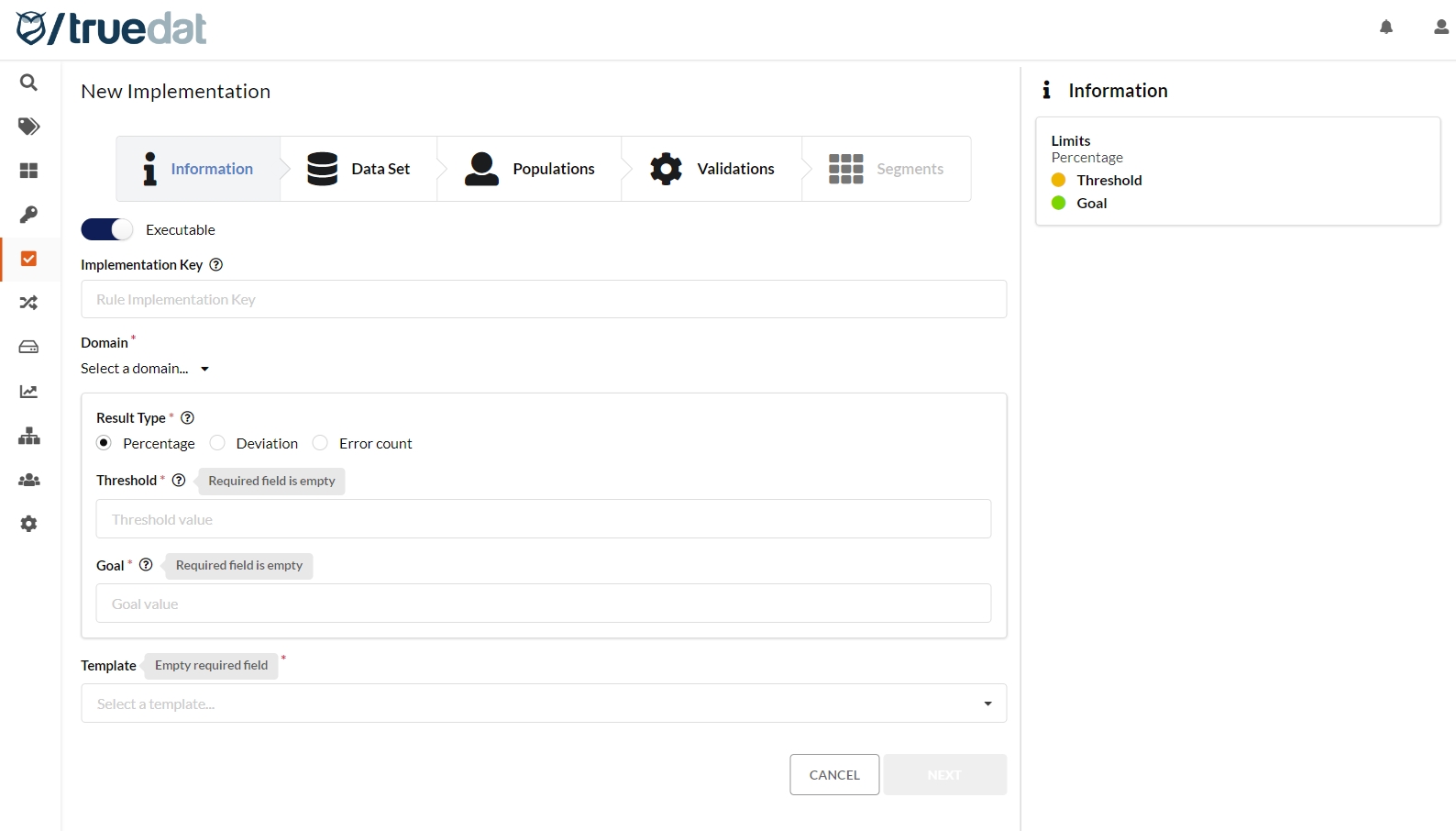

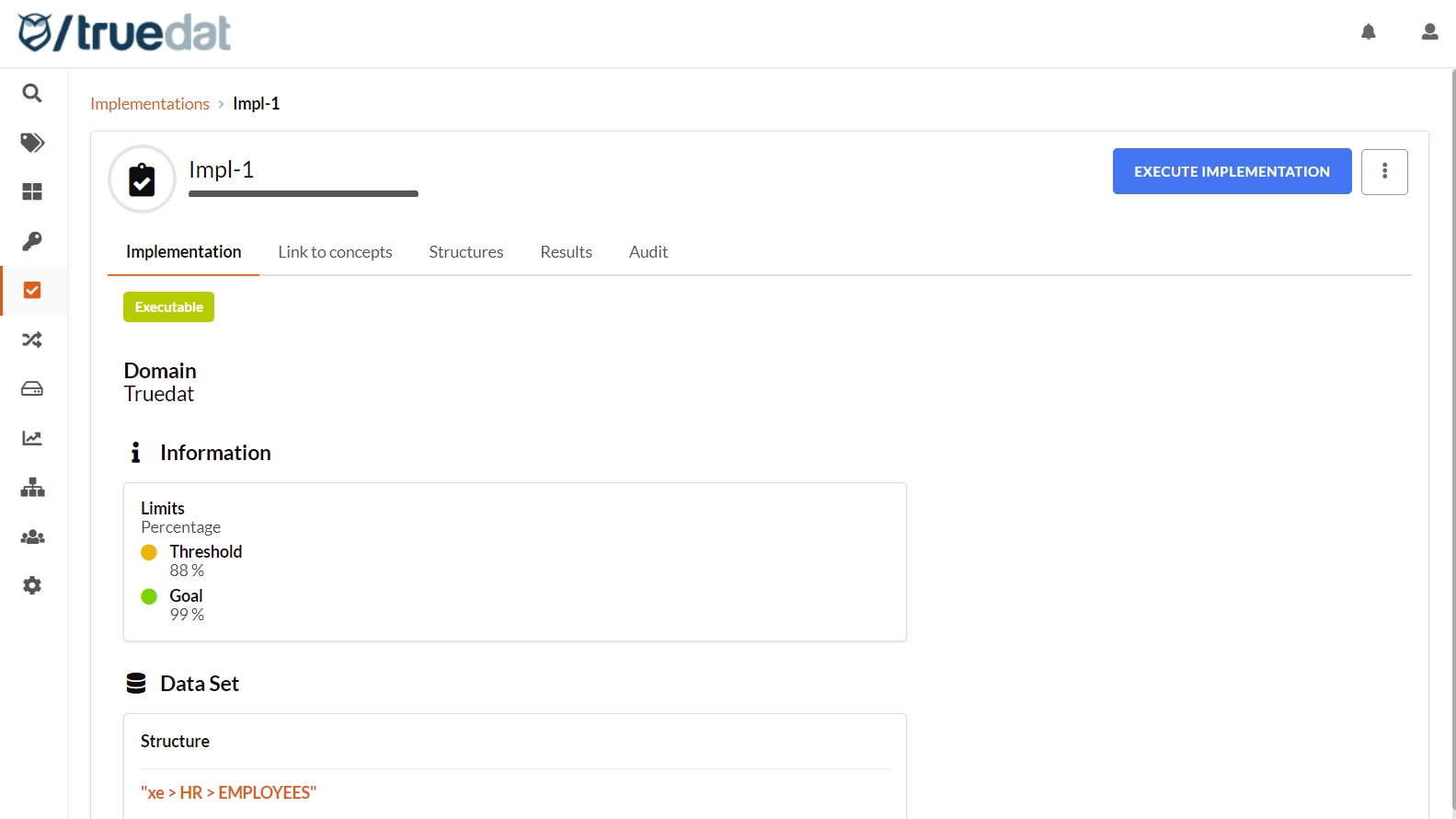

The implementation will be created by completing the following four sections:

Input the information associated to the quality implementation. You may introduce:

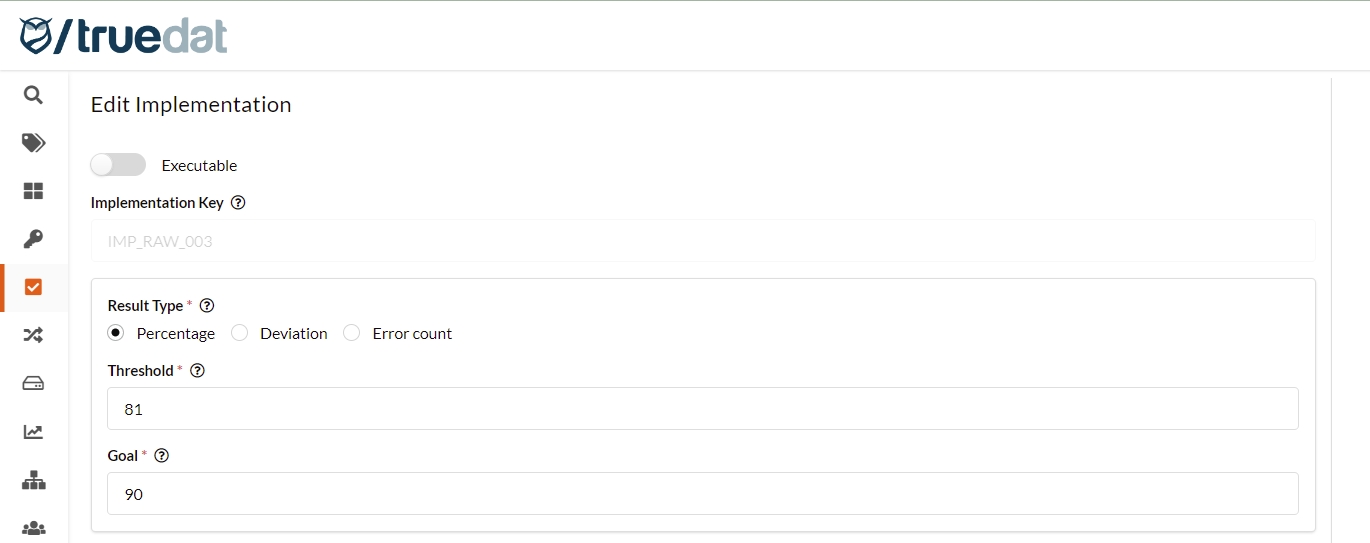

Executable: By default, all implementations are available to be executed by Truedat connectors. If you don't want a specific implementation to be executed by Truedat's quality engine, just uncheck this option. This is useful if you have integrated an external quality engine and don't want Truedat to try to execute something that is already being done by a third party.

Implementation key: Defines a unique identifier. You may not use an existing identifier. If you do not enter a value, an identifier will be autogenerated.

Result type: Defines whether compliance with this quality rule is measured as a quality percentage, a deviation or an absolute number of errors.

Quality percentage: The measure will be the % of records that match the quality criteria. Values will be between 0% and 100%, with 100% being the maximum quality of our data.

Deviation: Used to compare two counts/amounts that should be similar. It gives the % of difference between the data being checked and the data it is compared against. Values will be between 0% and 100%, with 0% being the maximum quality of our data.

Errors number: Used to check the absolute number of errors in our data, regardless of the volume of data. Very useful in cases with a high volume of records and a small margin of error. Values will be non-negative integers, with 0 errors being the best possible quality.

Threshold value: Minimum value resulting from the execution of the rule. Below this value we will consider that a quality error has occurred.

Goal value: Value to be reached for the defined rule. Between the threshold value and the goal value, we will consider that a quality alarm occurs.

Dynamic information: Fill out the information defined by your Quality Implementation template in case that you have defined one.
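The relationship between result type, threshold and goal can be illustrated with a minimal Python sketch. This is not Truedat's code: the names `ResultType`, `quality_percentage` and `classify`, the status labels, and the handling of values that fall exactly on the threshold or goal are all assumptions made for illustration. The sketch also assumes that for deviation and number of errors, where lower values are better, the comparisons are mirrored.

```python
from enum import Enum


class ResultType(Enum):
    PERCENTAGE = "percentage"
    DEVIATION = "deviation"
    ERRORS_NUMBER = "errors_number"


def quality_percentage(valid_records: int, total_records: int) -> float:
    """Share of records that match the quality criteria, as a percentage."""
    if total_records == 0:
        raise ValueError("total_records must be greater than zero")
    return 100.0 * valid_records / total_records


def classify(result: float, threshold: float, goal: float,
             result_type: ResultType) -> str:
    """Return 'error', 'alarm' or 'ok' for an execution result.

    Assumption: for percentage results higher is better; for deviation
    and errors_number lower is better, so the comparisons are mirrored.
    """
    if result_type is ResultType.PERCENTAGE:
        if result < threshold:
            return "error"   # below the threshold: quality error
        if result < goal:
            return "alarm"   # between threshold and goal: quality alarm
        return "ok"
    if result > threshold:
        return "error"
    if result > goal:
        return "alarm"
    return "ok"


result = quality_percentage(valid_records=950, total_records=1000)
print(result, classify(result, threshold=90.0, goal=98.0,
                       result_type=ResultType.PERCENTAGE))
```

With a threshold of 90 and a goal of 98, a result of 95% falls between the two values and is reported as an alarm in this sketch.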

The data set on which the quality is going to be measured. To do this, you must select one or more structures from the data catalog. You can also use a reference data table if you have set them up in Truedat.

It will be possible to join information from several tables as well as to join a table to itself. For this, it will be necessary to select the type of join (inner join, left outer join, right outer join or full outer join) and which are the fields of both structures that have to be used to make the union.

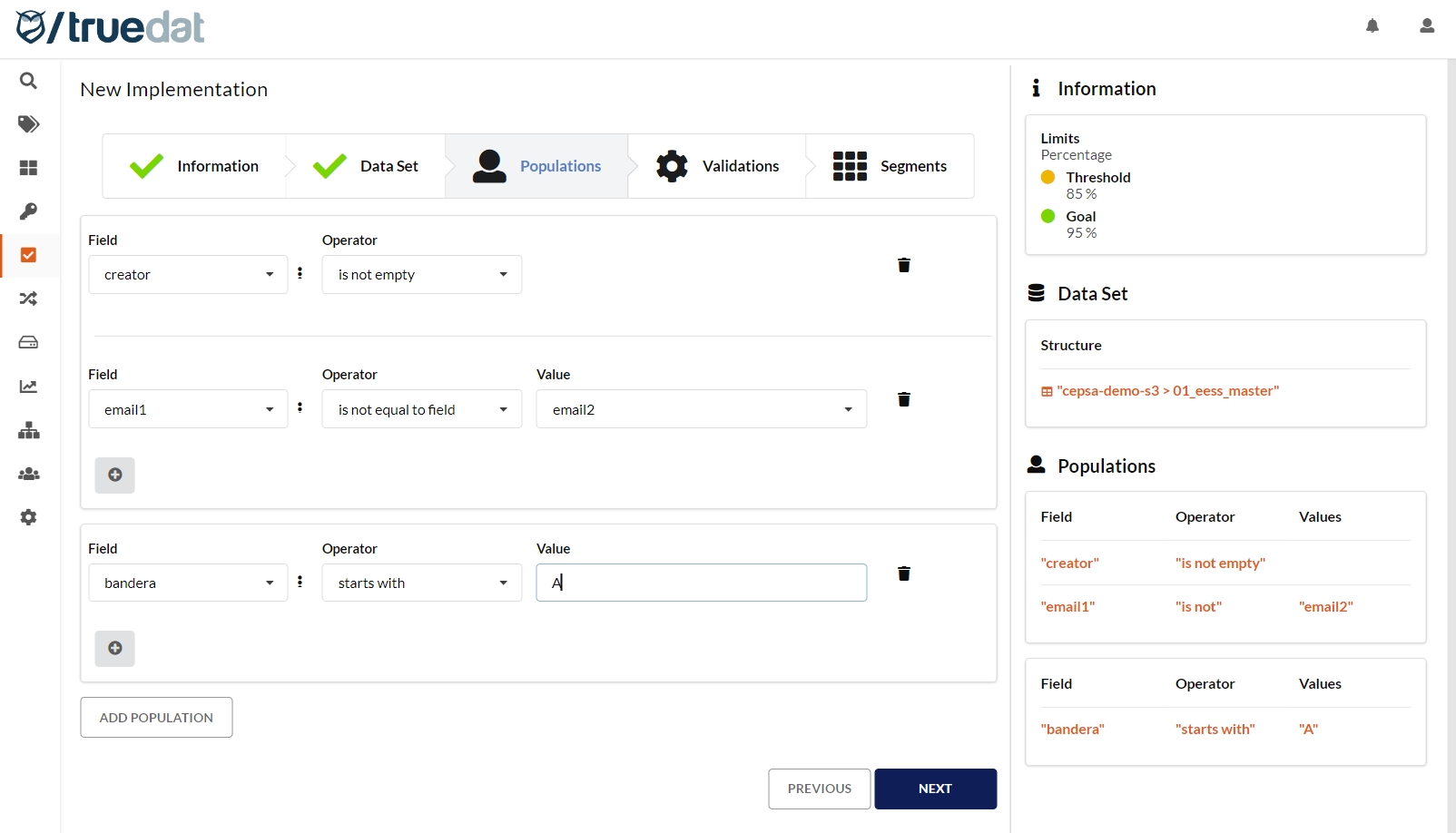

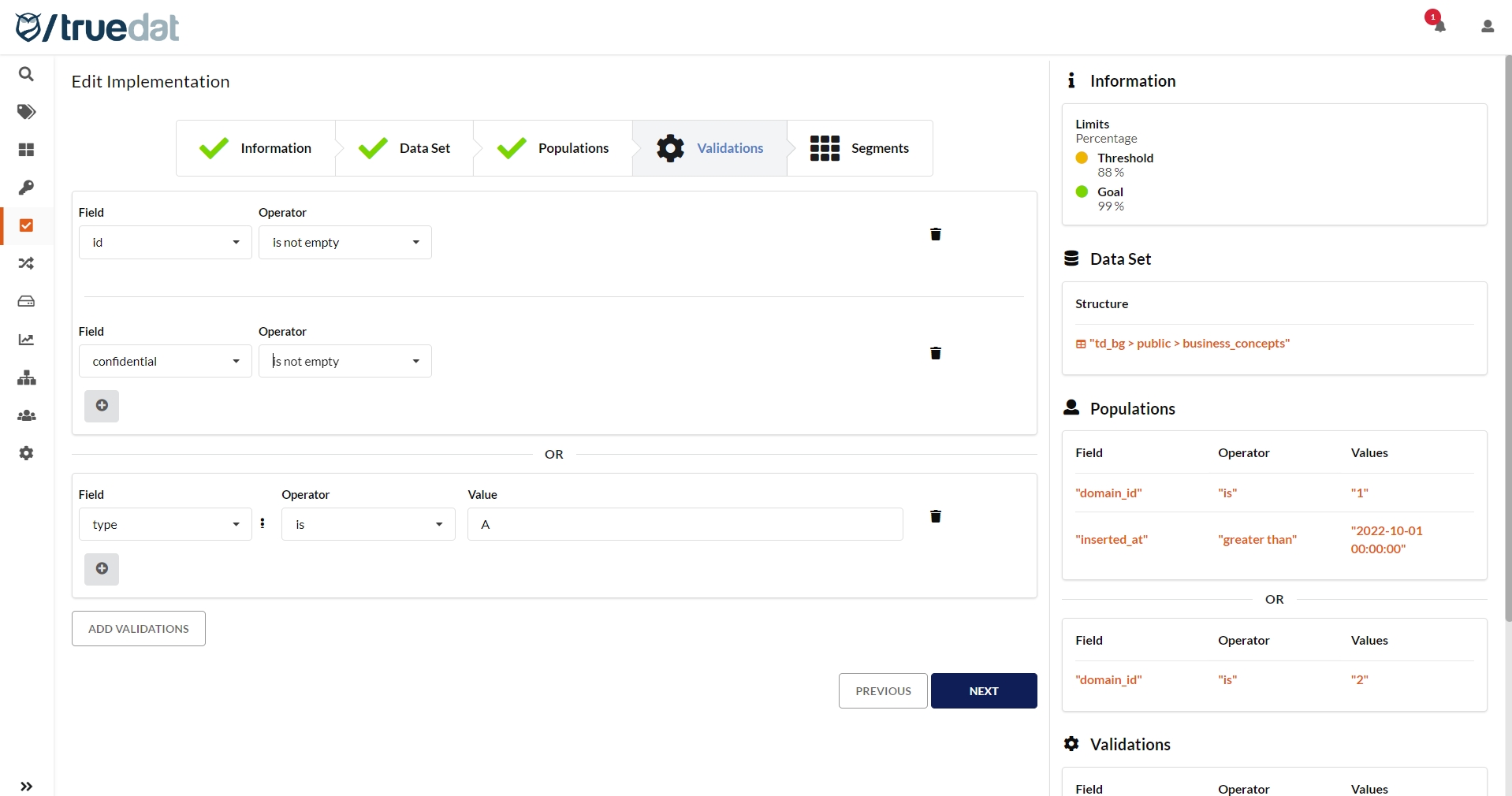

The validation can be applied to the whole data set defined in the previous step but it can also be applied to just a subset of that data set. To do this, you can apply filters to define the population where the validation is to be applied. It is actually possible to define more than one population in the same dataset.

The measure that will be obtained when this implementation is executed will be defined by the number of records that meet the validations specified here.
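The interaction between population and validation can be sketched in Python as two predicates applied in sequence. The function `measure`, the sample `customers` records and their field names are hypothetical and only illustrate the idea. The actual quality engine works against the source system, not in-memory lists.

```python
from typing import Callable, Iterable, Mapping, Tuple

Record = Mapping[str, object]


def measure(records: Iterable[Record],
            population: Callable[[Record], bool],
            validation: Callable[[Record], bool]) -> Tuple[int, int]:
    """Return (records passing validation, records in the population)."""
    in_population = [r for r in records if population(r)]
    valid = sum(1 for r in in_population if validation(r))
    return valid, len(in_population)


# Hypothetical sample data set.
customers = [
    {"country": "ES", "email": "a@example.com"},
    {"country": "ES", "email": ""},
    {"country": "FR", "email": ""},
    {"country": "ES", "email": "b@example.com"},
]

valid, total = measure(
    customers,
    population=lambda r: r["country"] == "ES",  # subset the check applies to
    validation=lambda r: r["email"] != "",      # condition records must meet
)
print(f"{valid} of {total} records in the population pass the validation")
```

Records outside the population (the French customer here) are ignored entirely, so they neither improve nor worsen the measure.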

For both the population step and the validation step, we will use some operators defined in the application. The product comes with some default operators but in each installation the operators to be used can be customized. It is important to emphasize that any change in the operators will imply changes in the quality engine if it is integrated into our installation.

An operator will always be applied to a field in the selected data set and may or may not have, depending on the operator, additional parameters.

Operators have the following characteristics:

Data types: The data type will be displayed when selecting a field both in the population step and in validation. Depending on the selected type, we will have valid operators available for that type. Examples:

Number: For a data type of number we will have operators of the type greater, less or equal, etc.

Text: For a data type with text format we will have operators that compare the length of said text.

Date: For a type of date data we will have an operator that will allow us to know if it is the last day of the month.

Scope: Some operators are available both in the population step and in the validation step, while others only make sense in one of the two steps and are available only there. For example, the operator that checks the format of a value is available in the validation step but not in the population filter.

Groupings: For a better understanding in the selection of the operator, some of them are shown grouped.

Parameters: Depending on the operator, a series of parameters may be defined, which may be values entered by the user or other fields of the selected data set. Examples:

No parameters: The "Is empty" operator does not need parameters.

One parameter: The "Is greater than" operator will require the user to enter the minimum value to be checked.

Two parameters: The "Between" operator will require the user to enter the minimum and maximum values to be checked

Value of a given list: The operator "Has a format of" will show us a drop-down list with the available formats to check: date, number, DNI, etc.

Another field of the selected data set. The "equals field" operator will require the user to select a field from the data set. To do this, a drop-down will be displayed where you can search and select the indicated field.

Another field in the data catalog: The "Referenced in" operator will allow you to select any field in the data catalog to perform a referential integrity test.
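The operator characteristics described above (data type, scope and number of parameters) can be modeled as a small registry. The Python sketch below is an assumption-laden illustration: the operator identifiers, the `Operator` class, the `FORMATS` table and `apply_operator` are hypothetical and do not reflect how operators are configured in a Truedat installation.

```python
from dataclasses import dataclass
from typing import Callable

BOTH_STEPS = frozenset({"population", "validation"})

# Hypothetical formats for a "has a format of" style operator.
FORMATS = {"number": str.isdigit}


@dataclass(frozen=True)
class Operator:
    data_type: str               # field type the operator applies to
    scopes: frozenset            # steps in which the operator is offered
    arity: int                   # parameters needed besides the field
    check: Callable[..., bool]   # the comparison itself


OPERATORS = {
    "is_empty": Operator("text", BOTH_STEPS, 0,
                         lambda value: value in (None, "")),
    "greater_than": Operator("number", BOTH_STEPS, 1,
                             lambda value, minimum: value > minimum),
    "between": Operator("number", BOTH_STEPS, 2,
                        lambda value, low, high: low <= value <= high),
    "has_format": Operator("text", frozenset({"validation"}), 1,
                           lambda value, fmt: FORMATS[fmt](value)),
}


def apply_operator(name: str, scope: str, value, *params) -> bool:
    """Apply an operator to a field value, checking scope and arity."""
    op = OPERATORS[name]
    if scope not in op.scopes:
        raise ValueError(f"{name} is not available in the {scope} step")
    if len(params) != op.arity:
        raise ValueError(f"{name} expects {op.arity} parameter(s)")
    return op.check(value, *params)


print(apply_operator("between", "validation", 5, 1, 10))
print(apply_operator("is_empty", "population", ""))
```

Keeping scope and arity as data, not code, mirrors the idea that operators can be customized per installation, although any such change would still have to be supported by the quality engine.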

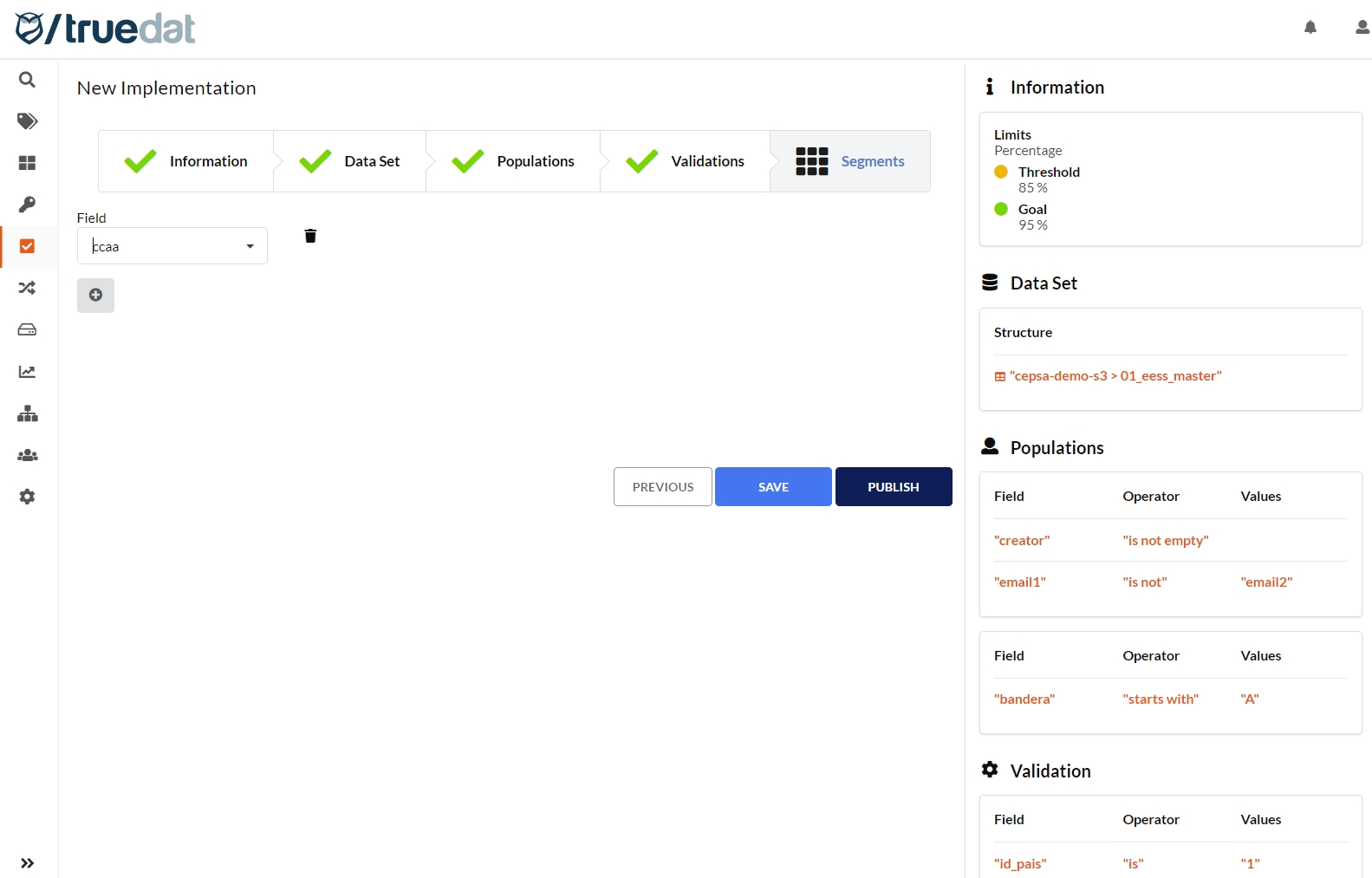

In this step you can define the drill-down criteria so that the quality results are presented not only as a total but also broken down by the defined segmentation. This step is optional and only users with the permission "Manage Implementation Segments" will have this option available.

Once these steps are completed, the implementation is created. If you have permissions to publish implementations you will have the option to either save the implementation in Draft status or directly in Published status.

For this implementation to be executed automatically, the installation must be integrated with a quality engine for the system on which the implementation is to be executed.

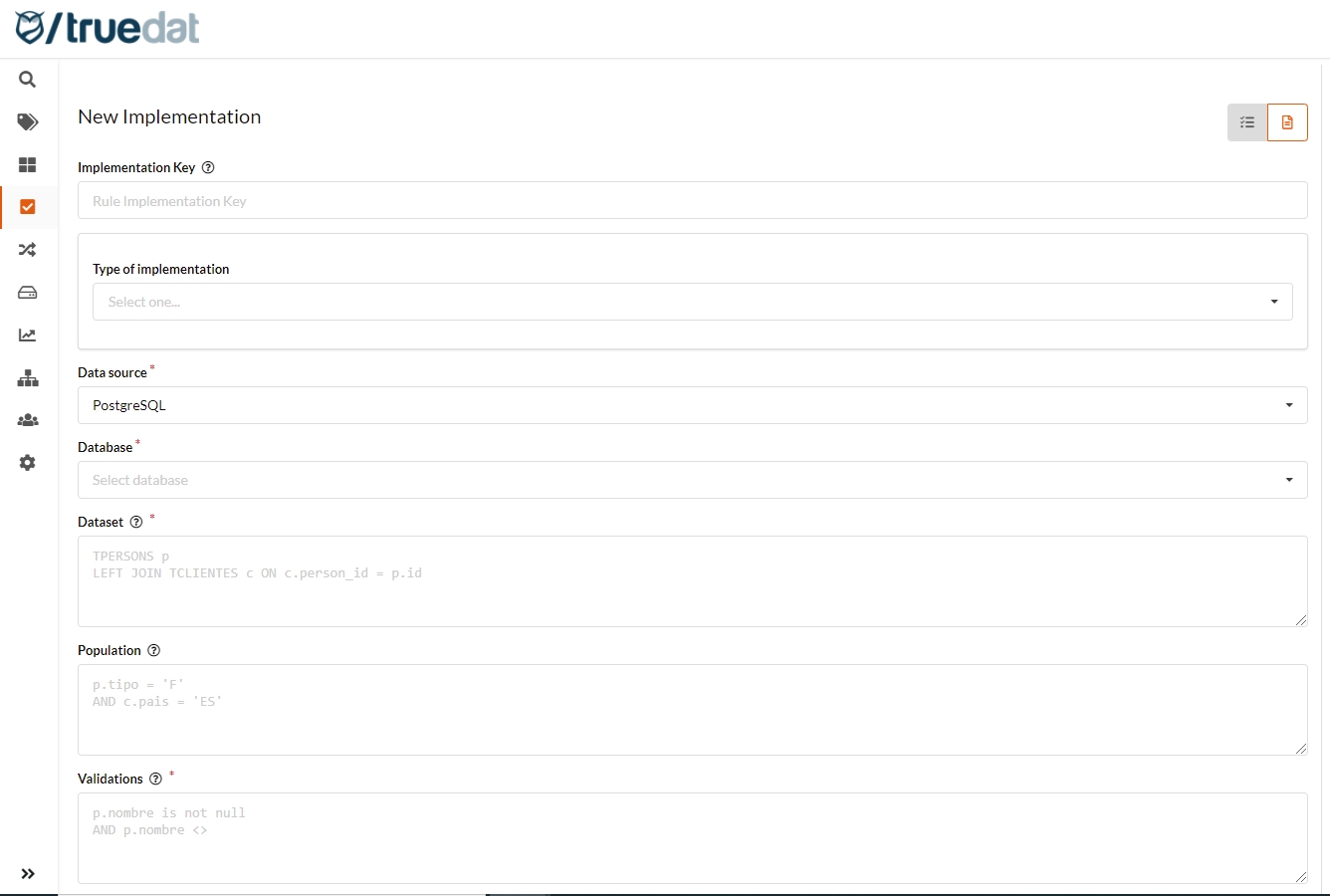

Raw/native implementations can be registered as well. The person who creates the implementation must know the system where the implementation is going to be executed in order to use the correct syntax for that system. For this type of implementation, the following values must be filled in:

Implementation Key: Defines a unique identifier. You may not use an existing identifier. Identifiers do not allow spaces or restricted characters. If you do not enter a value, an identifier will be autogenerated.

Dynamic Information: As defined by your implementation template if any is defined

Data Source: On which the validation is going to be executed.

Database: Required only if the data source needs a database to be selected.

Dataset: Defines the data set on which you want to perform the validation. In an SQL system, this field holds the FROM section of the query, where joins and table aliases can be entered.

Population: The filter to apply to the data defined in the previous field. It defines the subset of the data on which you want to perform the validation. In an SQL system, this field must contain syntax that is valid in the WHERE clause of a query.

Validation: The validation you want to perform on the data selected in the dataset field and filtered in the population field. In an SQL system, this field must contain syntax that is valid in the WHERE clause of a query.
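To make the role of the Dataset, Population and Validation fields more concrete, the following Python sketch combines three SQL fragments into count queries and runs them against an in-memory SQLite database. How a real quality engine assembles its queries is not described here, so `build_queries`, the table names and the sample rows are assumptions for illustration only.

```python
import sqlite3


def build_queries(dataset: str, population: str, validation: str):
    """Compose two count queries from the raw-implementation fragments.

    Assumption: the engine counts the population first, then the rows of
    that population which also satisfy the validation.
    """
    where_population = f"({population})" if population else "1 = 1"
    total_sql = f"SELECT COUNT(*) FROM {dataset} WHERE {where_population}"
    valid_sql = f"{total_sql} AND ({validation})"
    return total_sql, valid_sql


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE countries (id INTEGER, code TEXT);
    CREATE TABLE customers (id INTEGER, country_id INTEGER, email TEXT);
    INSERT INTO countries VALUES (1, 'ES'), (2, 'FR');
    INSERT INTO customers VALUES
        (1, 1, 'a@example.com'), (2, 1, NULL),
        (3, 2, NULL), (4, 1, 'b@example.com');
""")

total_sql, valid_sql = build_queries(
    # Dataset: FROM section, including a join and table aliases.
    dataset="customers c INNER JOIN countries k ON c.country_id = k.id",
    # Population: WHERE-style filter selecting the subset to check.
    population="k.code = 'ES'",
    # Validation: WHERE-style condition the records must satisfy.
    validation="c.email IS NOT NULL",
)
total = conn.execute(total_sql).fetchone()[0]
valid = conn.execute(valid_sql).fetchone()[0]
print(f"{valid} of {total} records are valid")
```

The fragments are interpolated as plain text because they are SQL authored by the person defining the implementation. This is also why the syntax has to match the target system.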

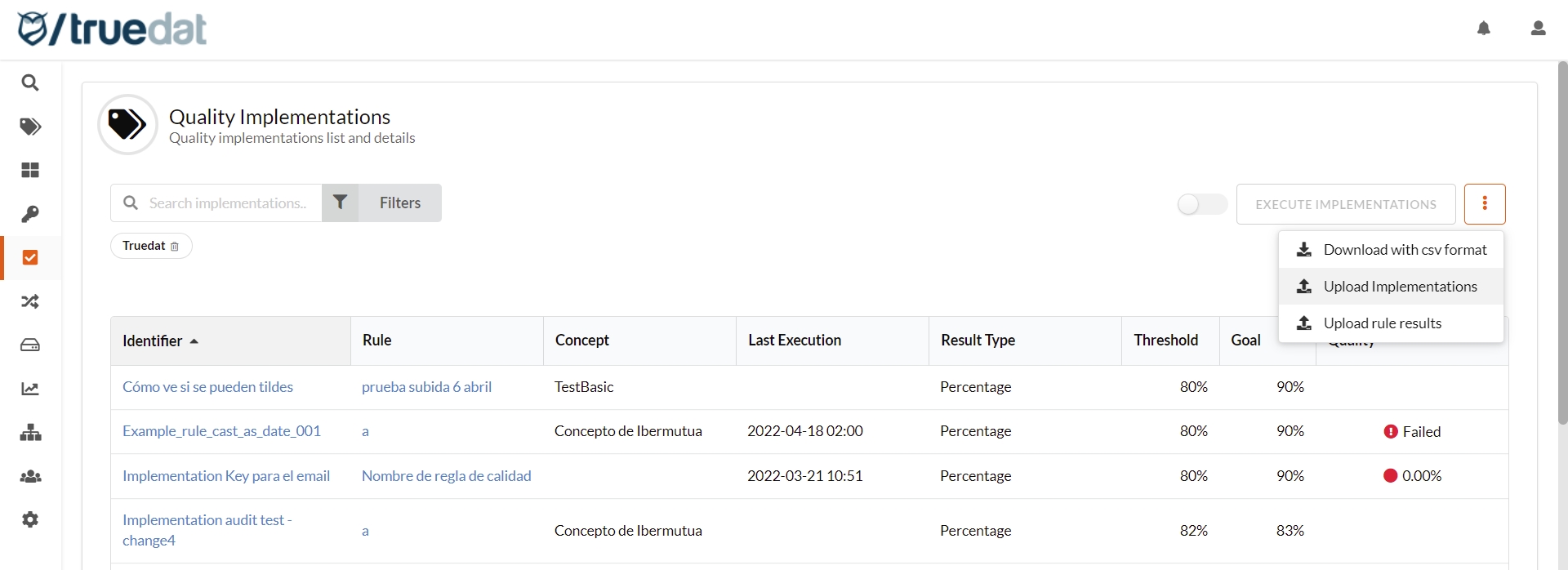

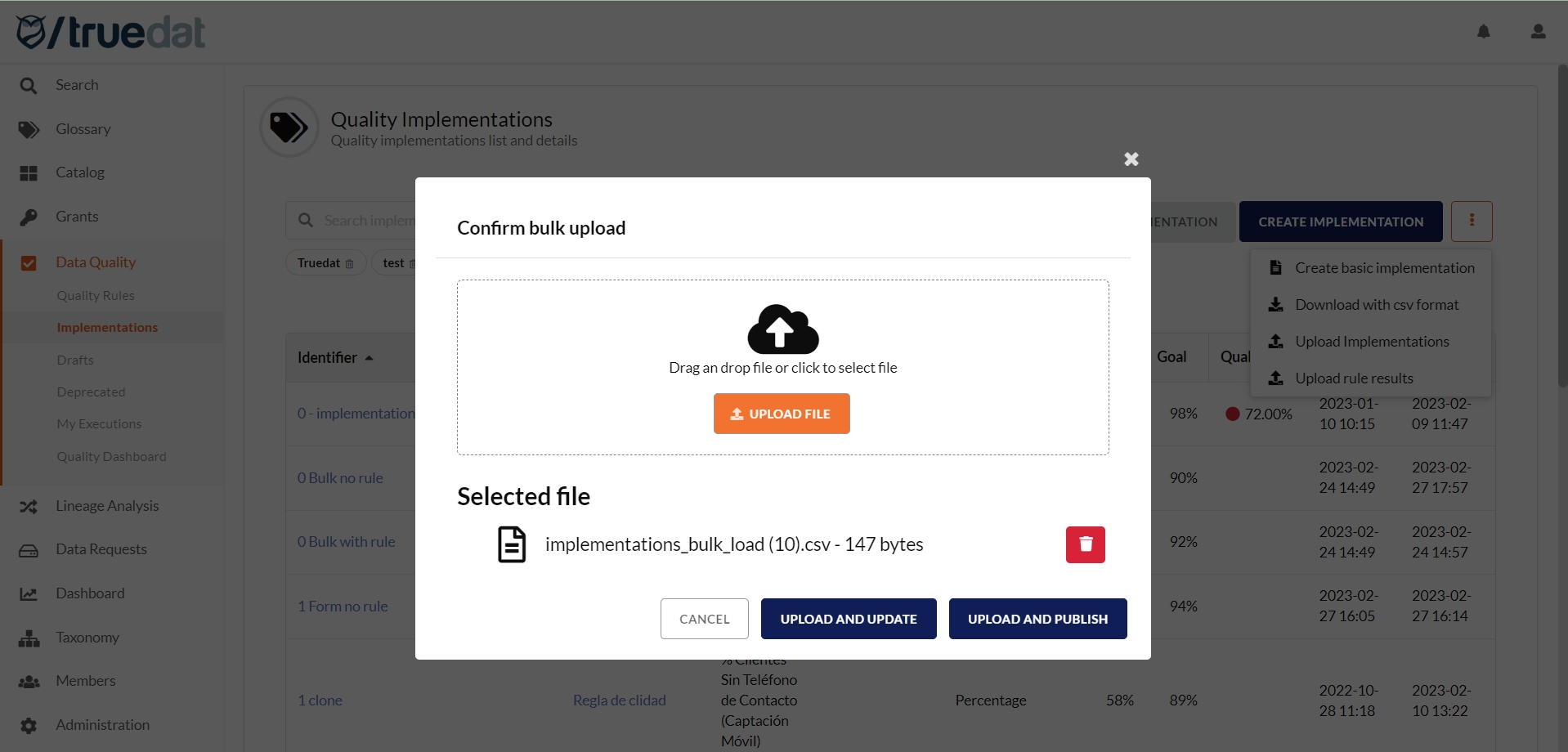

It is possible to bulk upload implementations from a CSV file, but only the 'Information' fields. The format of the file will depend on the template defined in your installation. To upload the file, go to the Implementations screen and, in the 3-dot button menu, select 'Upload implementations'.

If you have permission to publish implementations, you can choose to just upload the implementations in draft status or directly upload them in published status.

File format:

implementation_key (optional): only required to update existing implementations. Leave it blank to create new ones.

domain_external_id: external id of the domain the implementation belongs to.

result_type: this can be either percentage or errors_number or deviation.

minimum: threshold value.

goal: goal value.

template: name of the template.

template fields: use their name (not label).
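A file with the columns listed above could be generated with a short Python script such as the sketch below. The column names come from the list above, but the comma delimiter, the template name `quality_template`, the template field `criticality`, the domain id `sales` and the key `impl_0001` are hypothetical. Check the format expected by your installation before uploading.

```python
import csv
import io

BASE_COLUMNS = ["implementation_key", "domain_external_id", "result_type",
                "minimum", "goal", "template"]
ALLOWED_RESULT_TYPES = {"percentage", "errors_number", "deviation"}


def to_csv(rows, template_fields):
    """Serialize implementation rows, rejecting unknown result types."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer,
                            fieldnames=BASE_COLUMNS + list(template_fields),
                            lineterminator="\n")
    writer.writeheader()
    for row in rows:
        if row["result_type"] not in ALLOWED_RESULT_TYPES:
            raise ValueError(f"unknown result_type: {row['result_type']}")
        writer.writerow(row)
    return buffer.getvalue()


rows = [
    # Blank implementation_key: a new implementation is created.
    {"implementation_key": "", "domain_external_id": "sales",
     "result_type": "percentage", "minimum": 90, "goal": 98,
     "template": "quality_template", "criticality": "high"},
    # Existing implementation_key: the implementation is updated.
    {"implementation_key": "impl_0001", "domain_external_id": "sales",
     "result_type": "errors_number", "minimum": 10, "goal": 0,
     "template": "quality_template", "criticality": "low"},
]

content = to_csv(rows, template_fields=["criticality"])
print(content)
```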

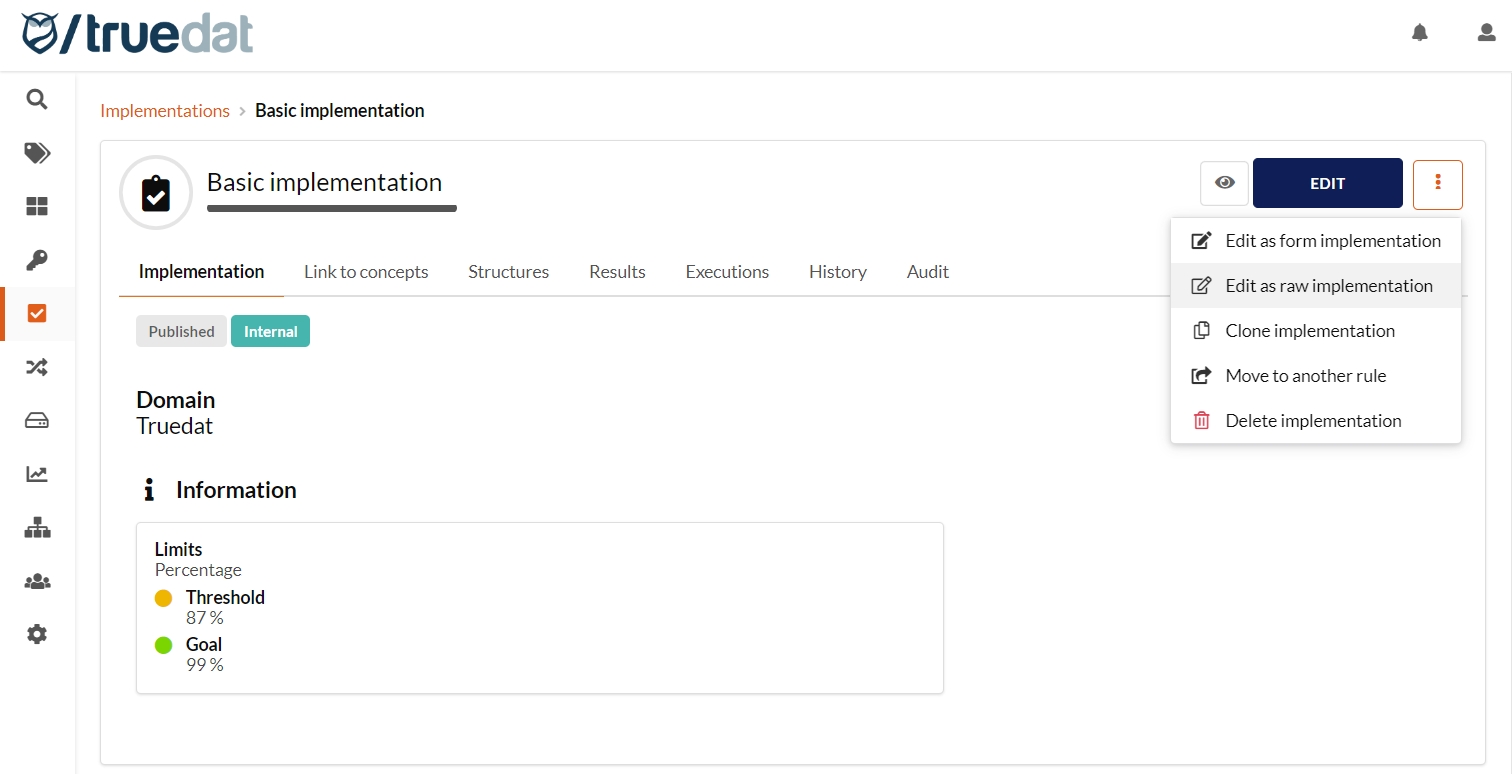

The implementations loaded this way will be of the type basic implementation with just the basic information. But you can edit them afterwards to complete the information related to Data Set, Population (optional), Validation and Segmentation (optional). You can add this information by completing the multi-step form or the raw implementation form just by choosing the relevant option: "Edit as form implementation" or "Edit as a raw implementation".

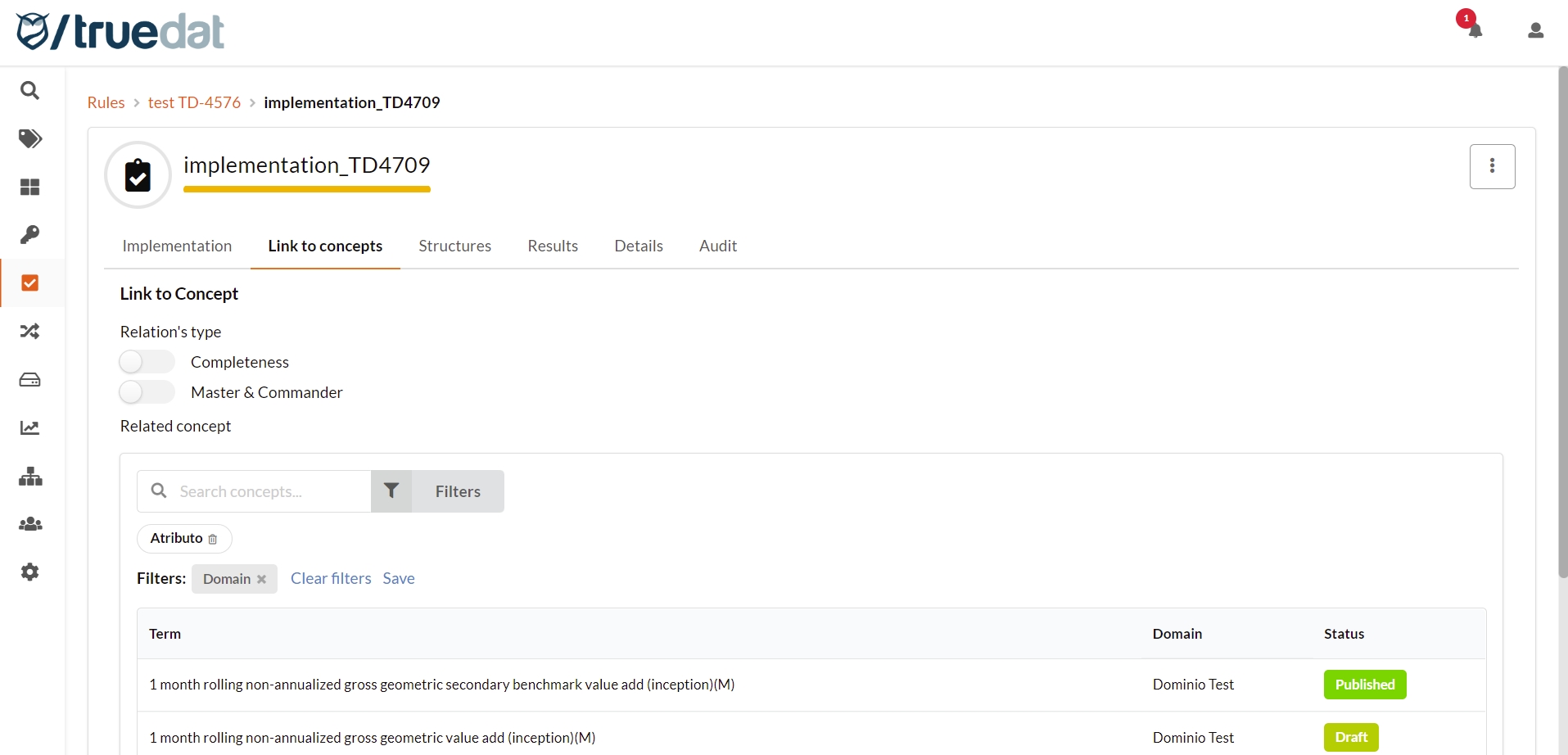

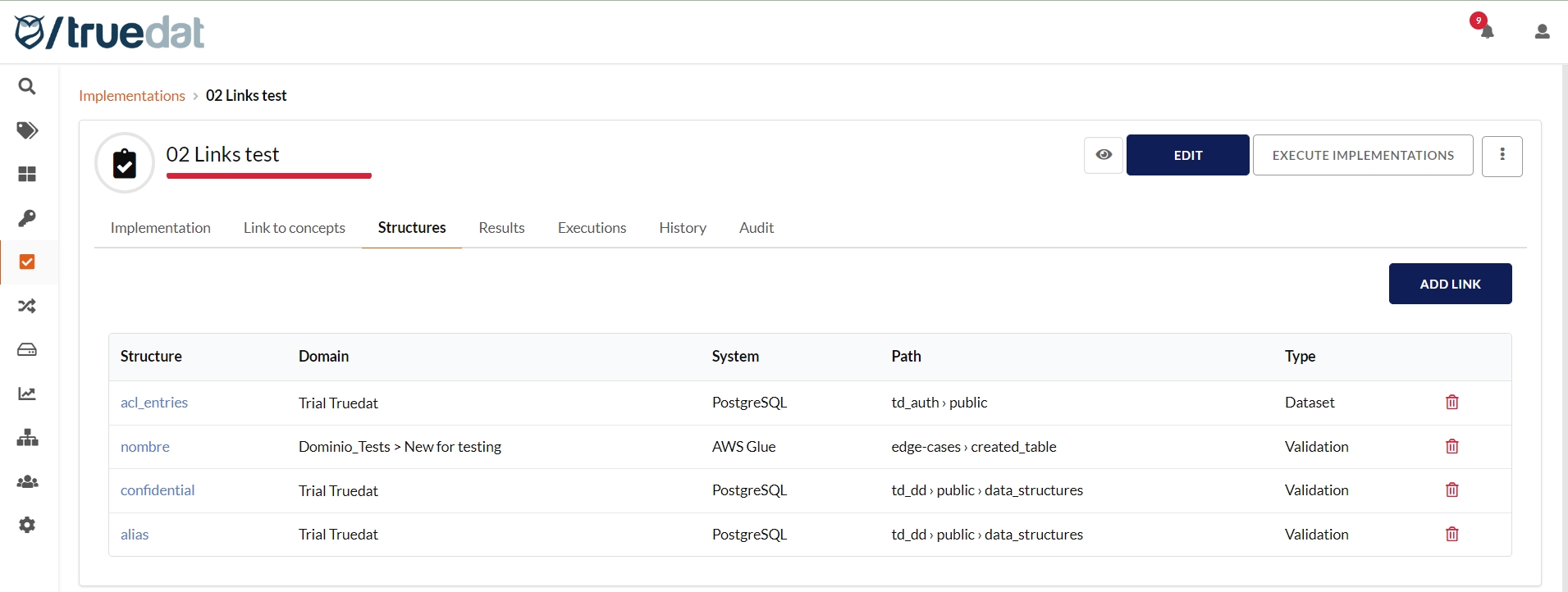

Quality implementations can be linked to business concepts on the tab 'Link to concepts'. You can define different types of relationships in the Admin module.

Quality implementations are automatically linked to the data structures used in the dataset and in the validations, and these links are displayed in the tab "Structures". You can delete and add links if the ones automatically proposed by the tool are not correct or relevant, which may be the case for raw implementations, where the tool extracts the information from the SQL code and may not be accurate.

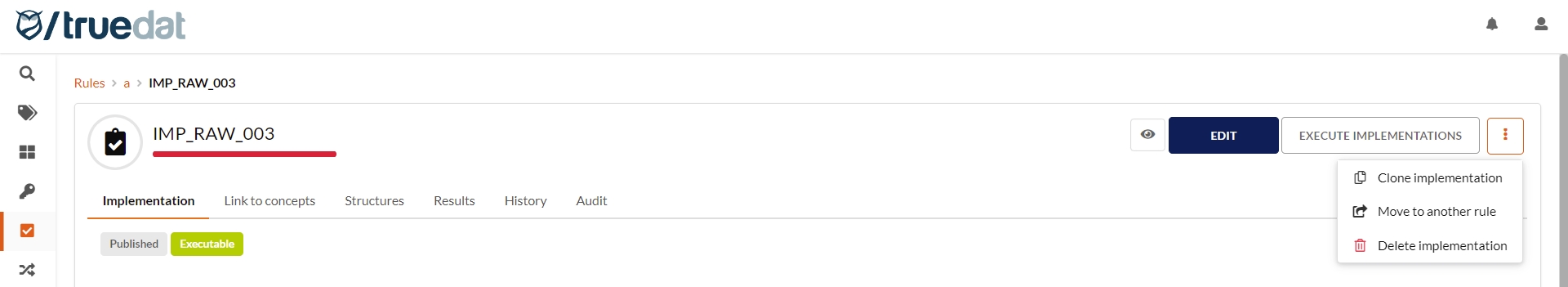

If you edit a published implementation, a new version of the implementation will be created and this new version may go through the approval workflow if required.

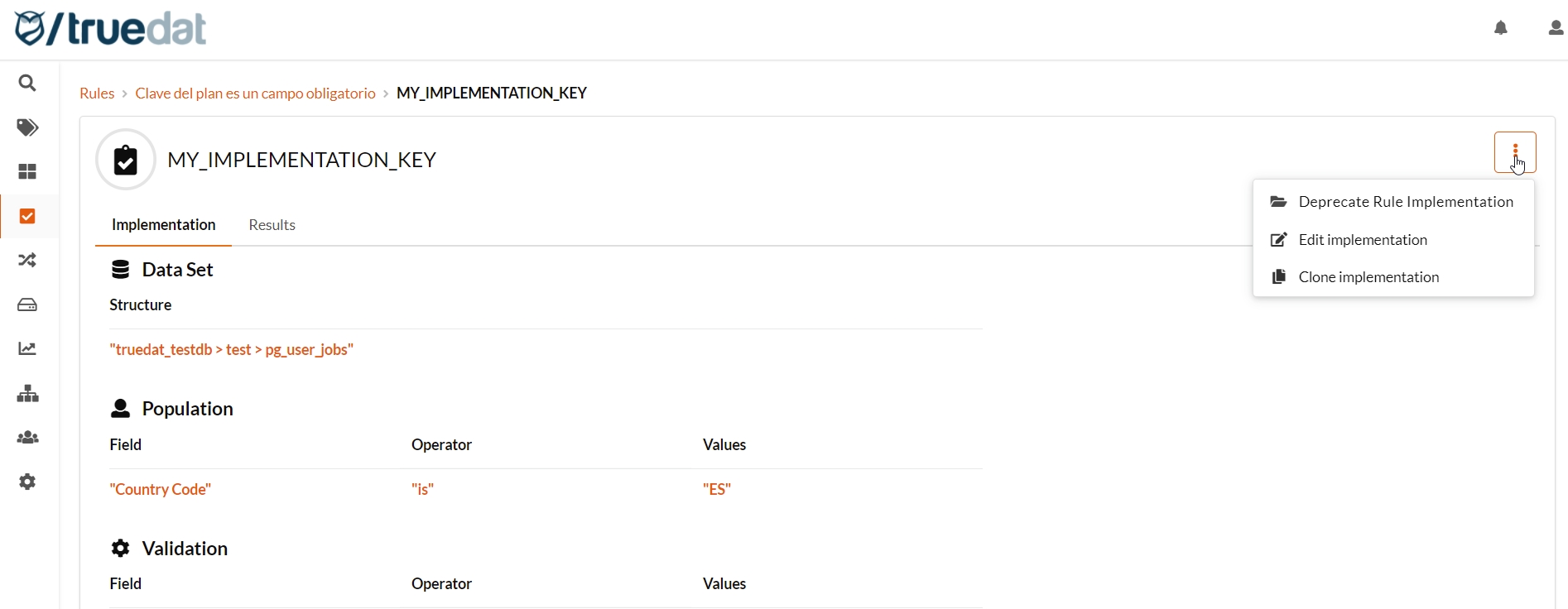

Basic implementations can be edited to include information regarding dataset, population and validation. In order to do so, you can either edit them as form implementation where you will complete the multi-step form or edit them as native form. From the implementation details page, click on the 3-dot menu button and select the corresponding option.

You can also upload a file to update implementations but only the general information (type of result, goal, threshold and any field of the custom template) and not the information related to the dataset, population, validation or segmentation.

There is the option to clone existing quality implementations in a simple way:

The new quality implementation will inherit all parameters from the previous implementation except the implementation key, as a new key will have to be defined for this new implementation.

The new implementation will be linked to the same rule as the implementation it was cloned from.

The concepts linked to the implementation are also copied to the cloned implementation.
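The cloning behavior described above amounts to copying every attribute except the key. A minimal Python sketch, using a hypothetical `Implementation` data class whose fields are simplified assumptions and do not represent Truedat's data model:

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple


@dataclass(frozen=True)
class Implementation:
    implementation_key: str
    rule: Optional[str]           # rule the implementation is linked to
    result_type: str
    minimum: float
    goal: float
    concepts: Tuple[str, ...] = ()  # linked business concepts


def clone(source: Implementation, new_key: str,
          existing_keys: set) -> Implementation:
    """Copy everything from the source except the implementation key."""
    if new_key in existing_keys:
        raise ValueError(f"implementation key already in use: {new_key}")
    return replace(source, implementation_key=new_key)


original = Implementation("impl_0001", "Valid email", "percentage",
                          90.0, 98.0, ("Customer",))
copy = clone(original, "impl_0002", existing_keys={"impl_0001"})
print(copy)
```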

You can deactivate your quality implementations. This prevents them from being executed by the scheduled process while maintaining the information for your dashboards. To do this, edit the implementation and turn off the 'Executable' slider.

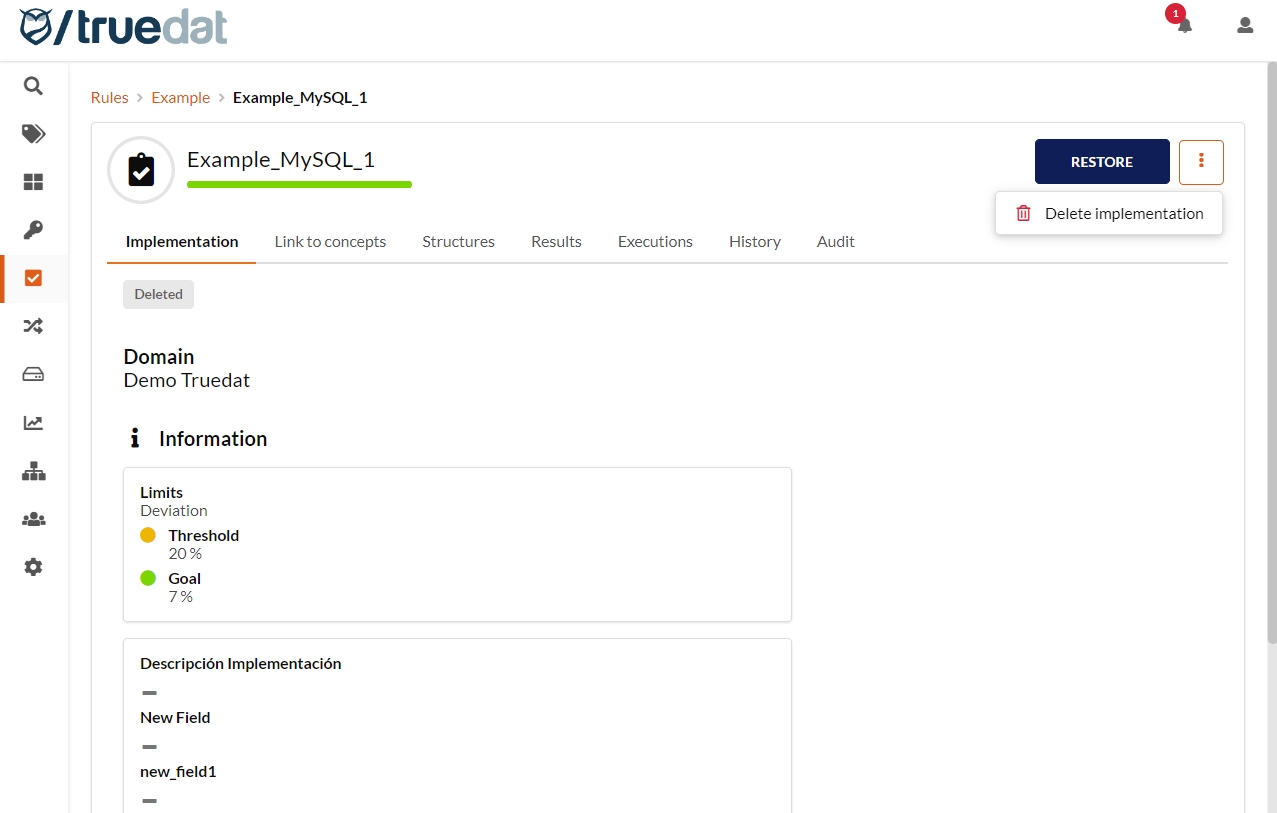

If you no longer want to keep an implementation, it can be deleted if you have the permission "Review Implementations". Click on the button on the right-hand side and select Delete implementation. By doing this, the implementation will be marked as 'Deprecated'.

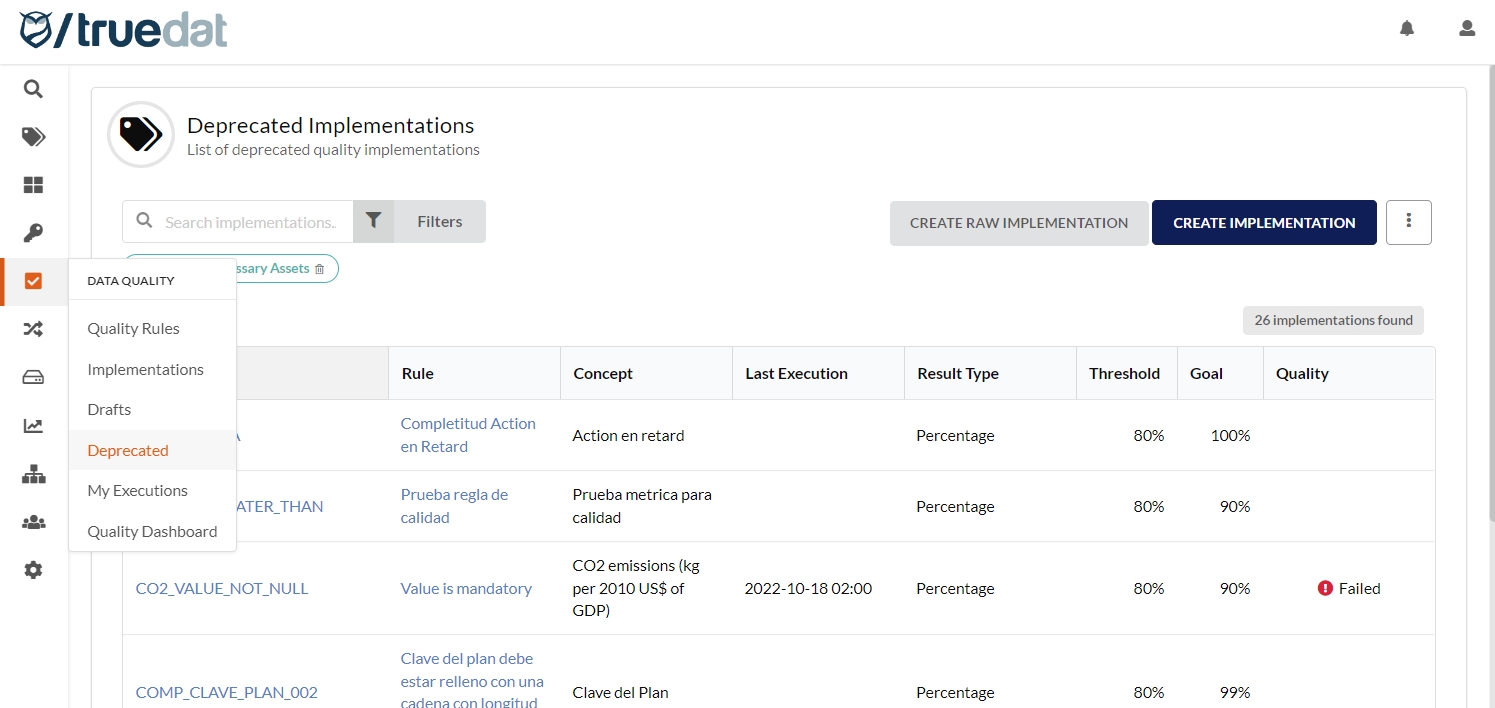

All deprecated implementations can be accessed from the DATA QUALITY menu, in the Deprecated sub-menu option. From this list you can view the deprecated implementations and either restore them and bring them back to Published status or permanently delete them.

Users with the appropriate permissions can request the execution of quality implementations. For these implementations to run, the data source needs to be correctly set up with data access.

There are two ways to execute implementations:

1) From the implementations screen there is a slider next to the button "Execute Implementations" to enable the request of executions. Once it is activated, select the implementations to run and press the execute implementation button.

2) From the implementation's details screen in case you want to execute just a specific implementation.

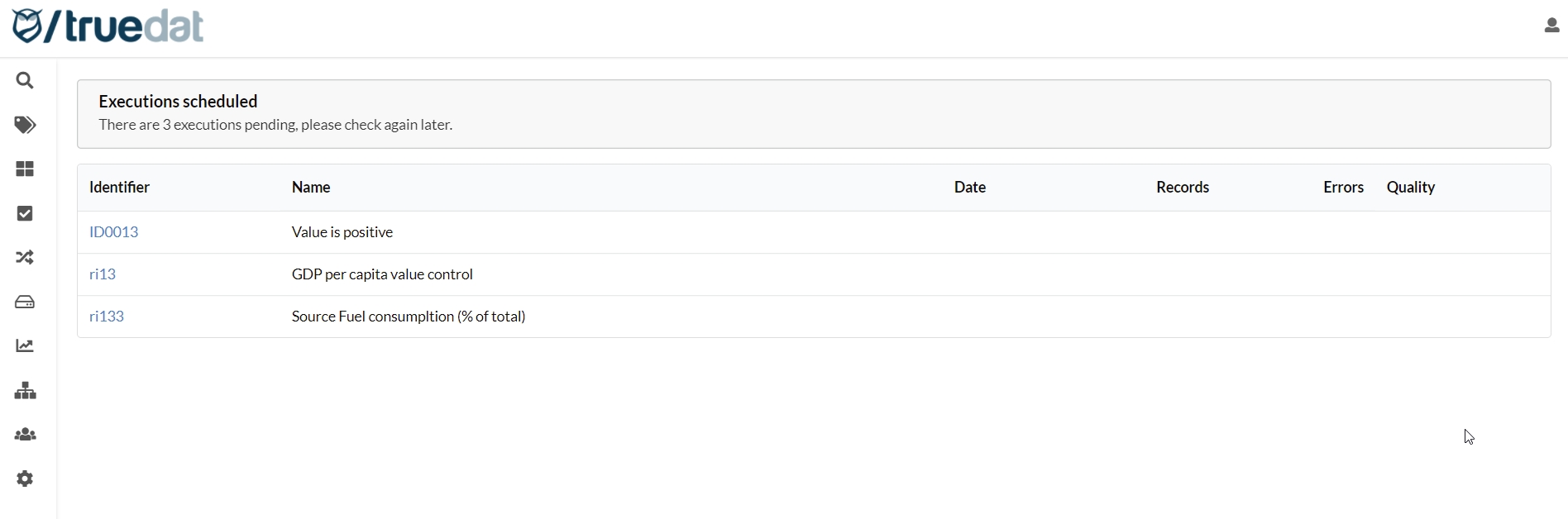

Once the execution has been requested, you will be redirected to a screen where the progress of the execution can be monitored. Refresh the screen to see the status.

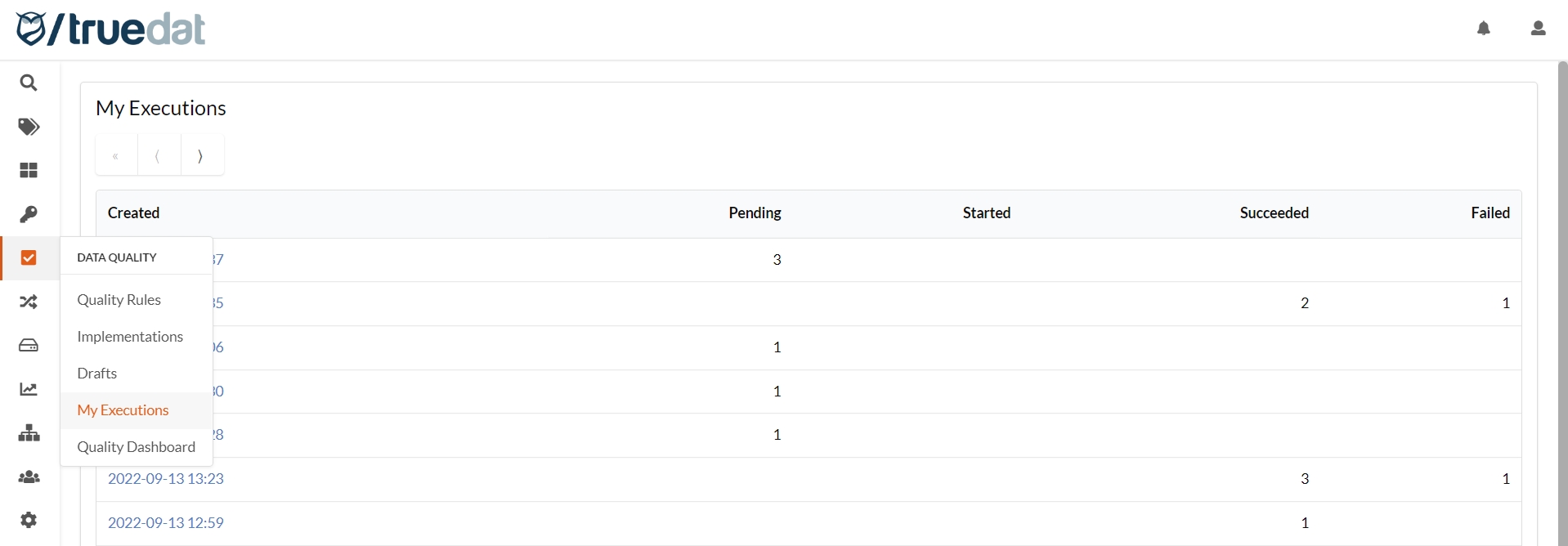

You can view a list of the last 50 executions you have requested by going to the menu option "My Executions" under "DATA QUALITY".

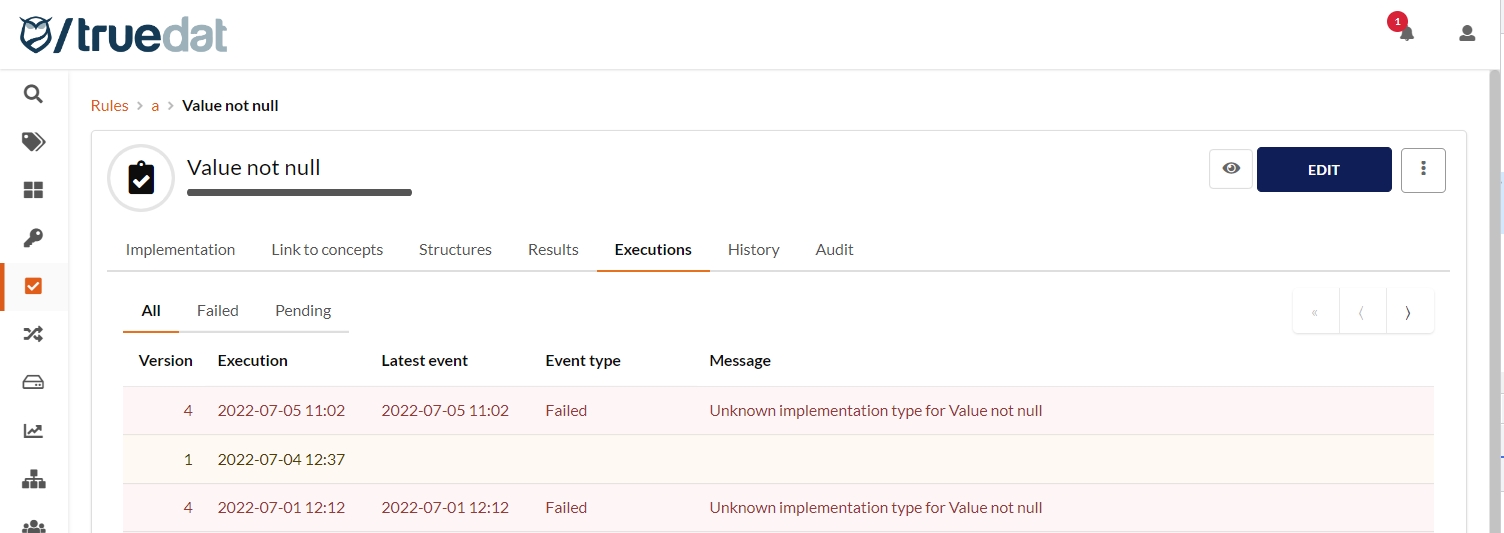

A list of all the executions of an implementation can be viewed by opening the implementation and selecting the Executions tab. You can filter to view failed executions and pending executions.

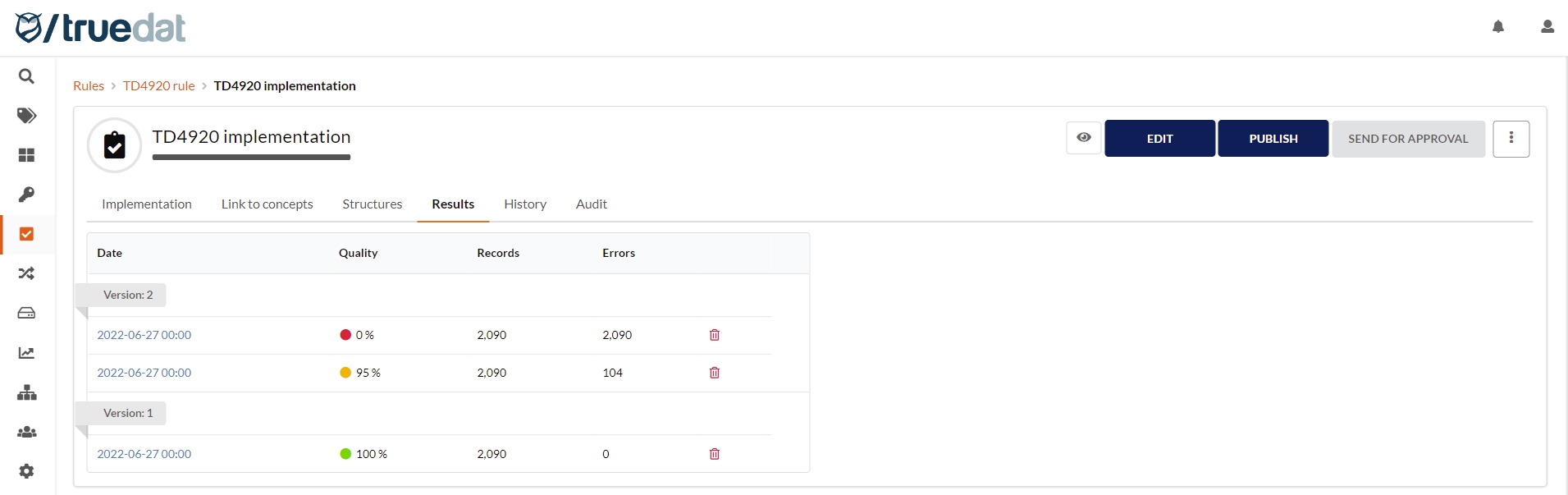

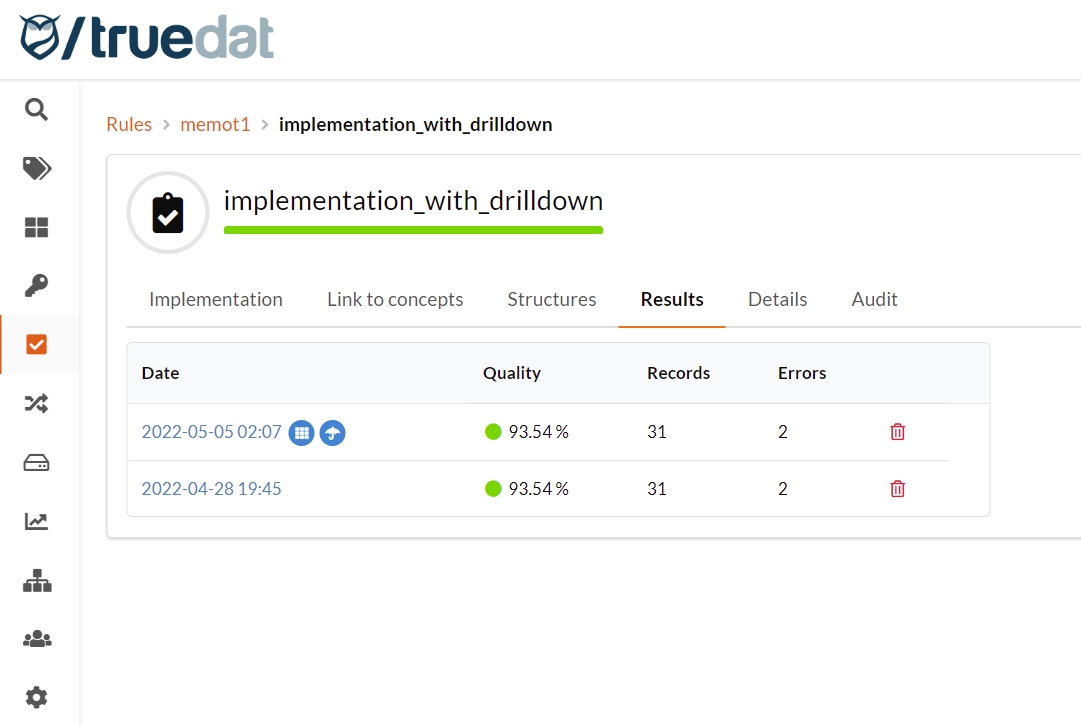

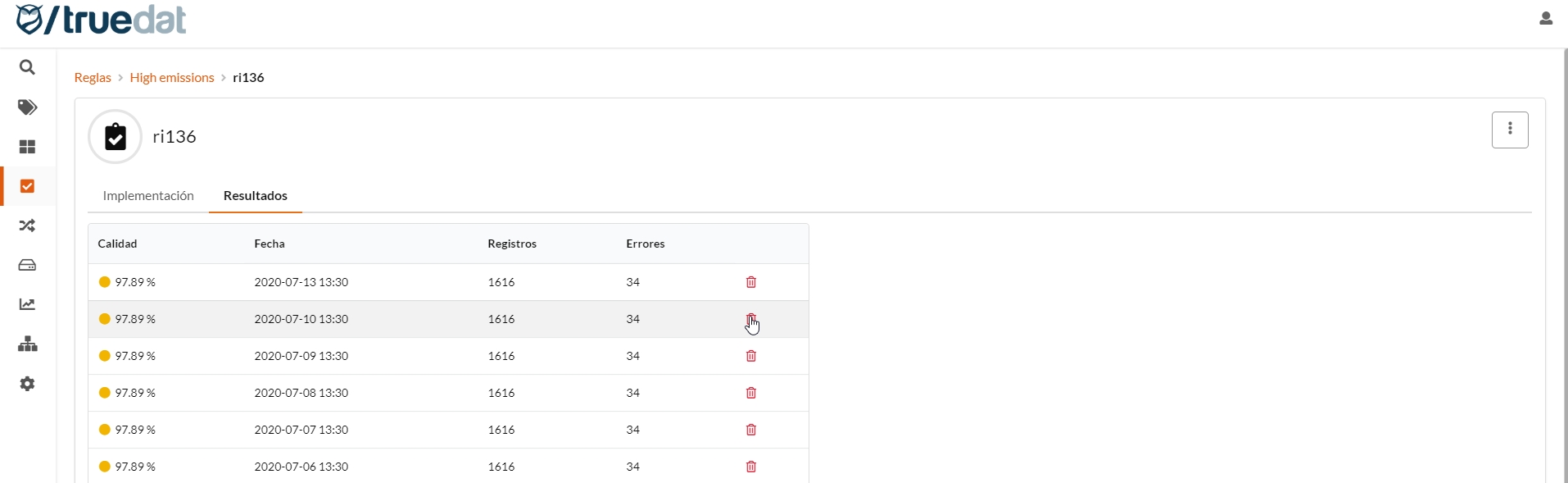

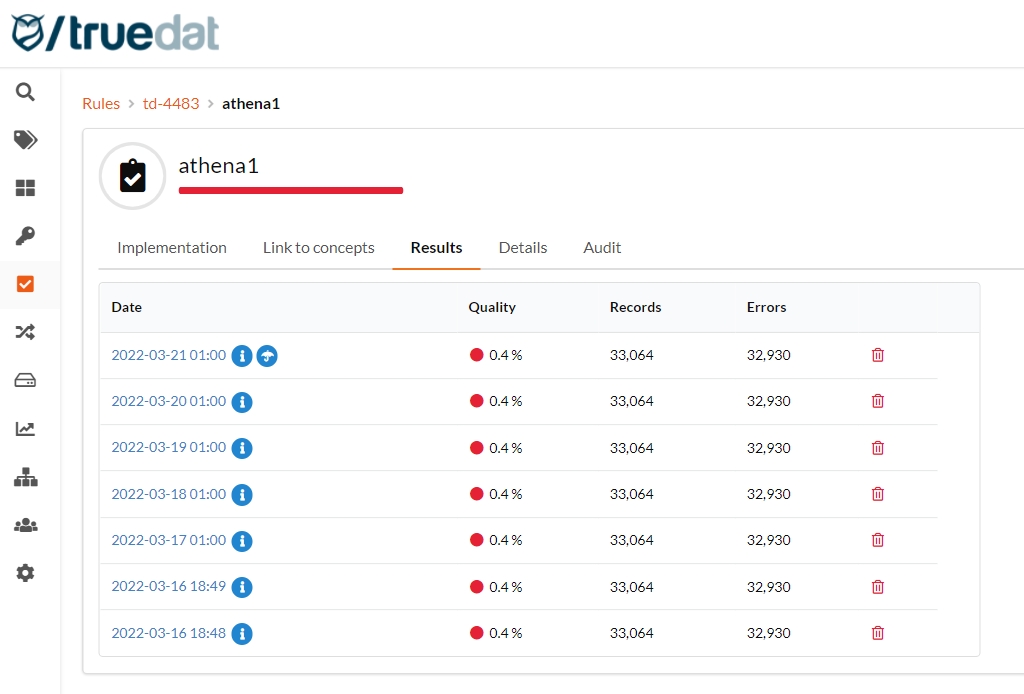

The data quality module is prepared to receive and store quality execution results, being able to display them to the user on the screen. In case of receiving results, the result of each of the implementations of the rule will be displayed.

Additionally, by clicking on an implementation we will be able to see the history of all its executions.

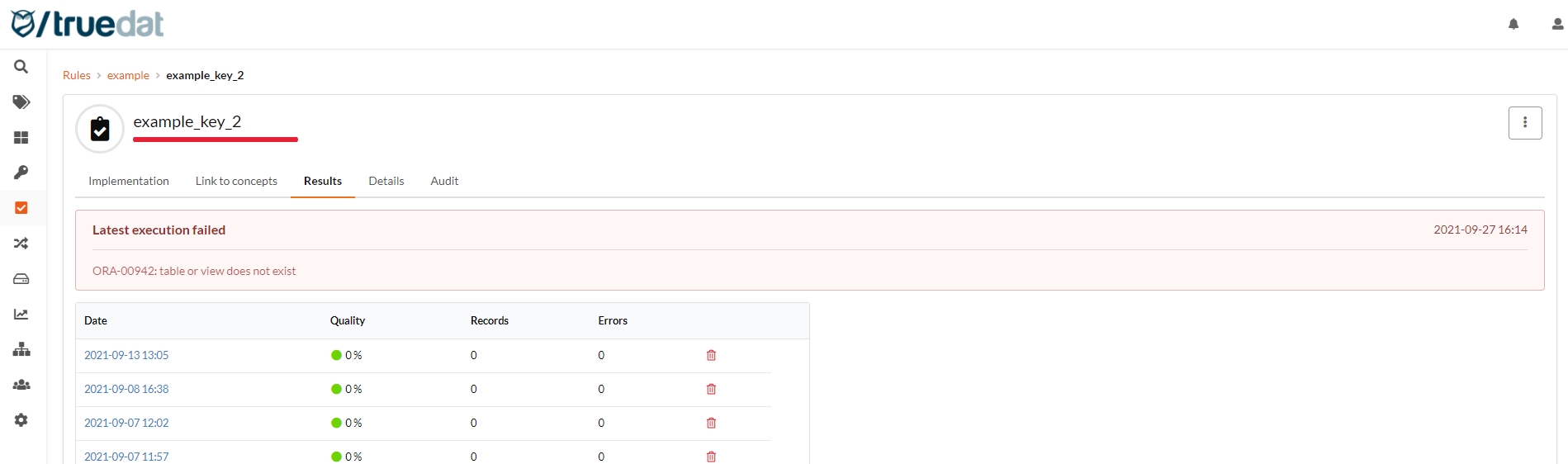

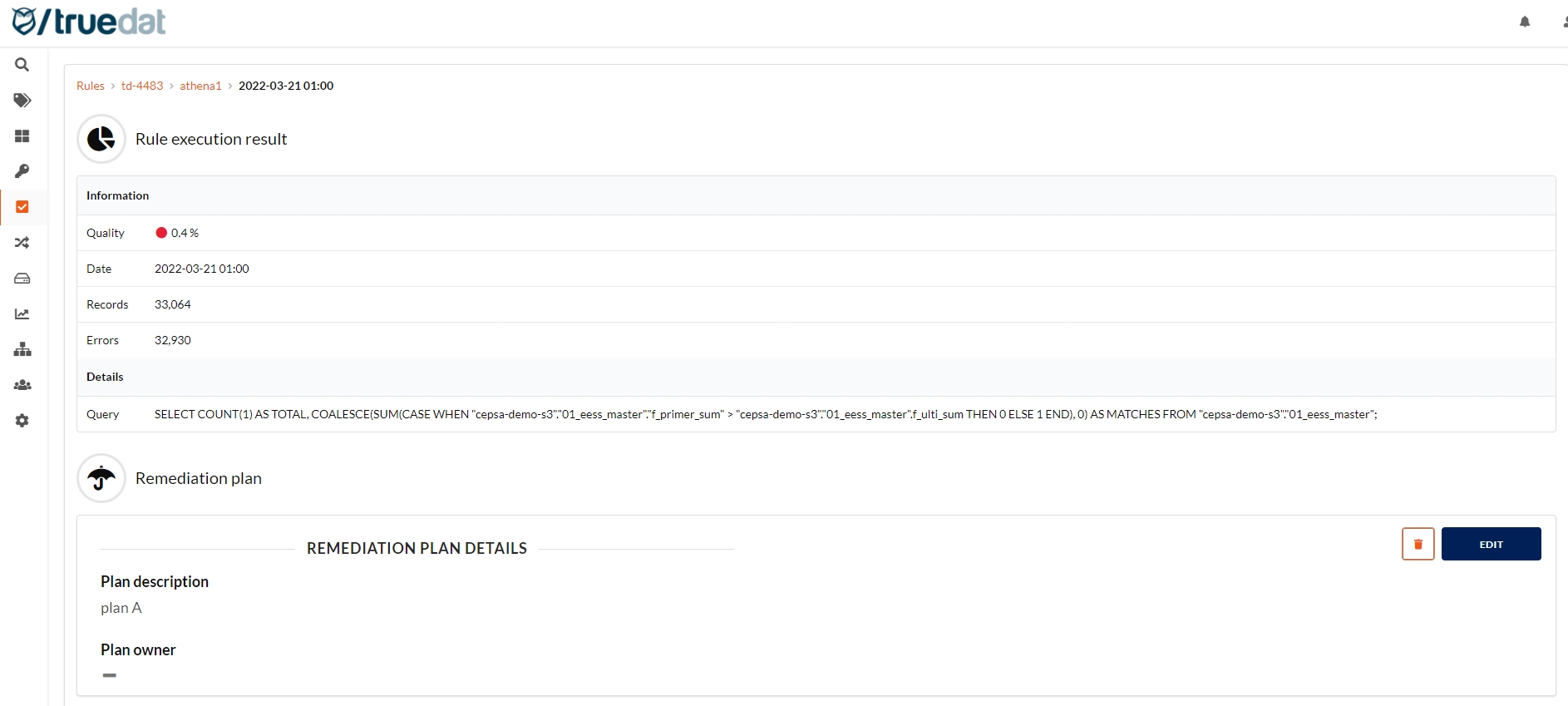

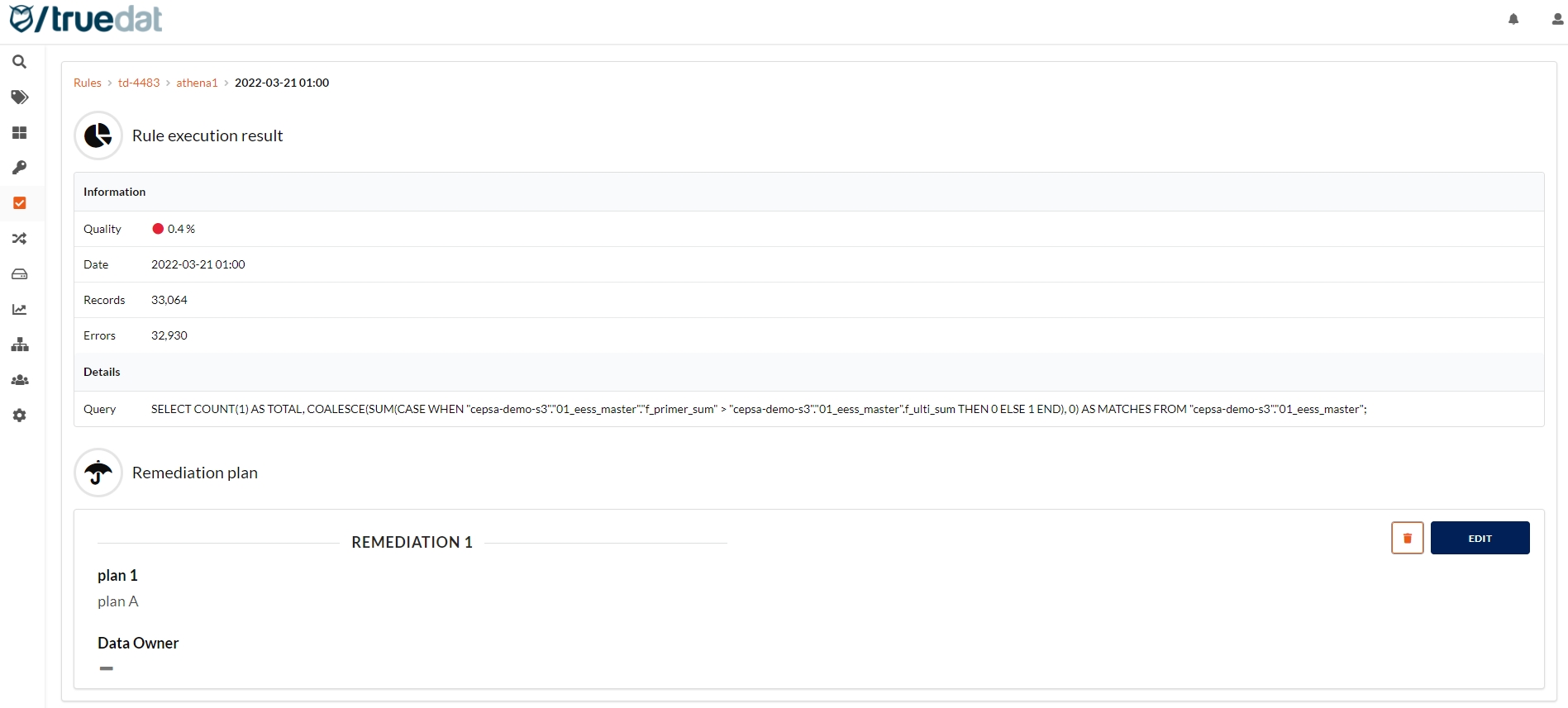

By clicking on the date of the execution you can see the details of that execution. Also, the Details tab shows the information of the last execution.

Admin users will have the ability to delete quality rule results. This shouldn't happen often, but this option can help you eliminate erroneous uploads.

If the latest execution of an implementation has finished with an error, this will be displayed both in the implementations list and in the implementation results tab to allow the user to identify the problem and fix it.

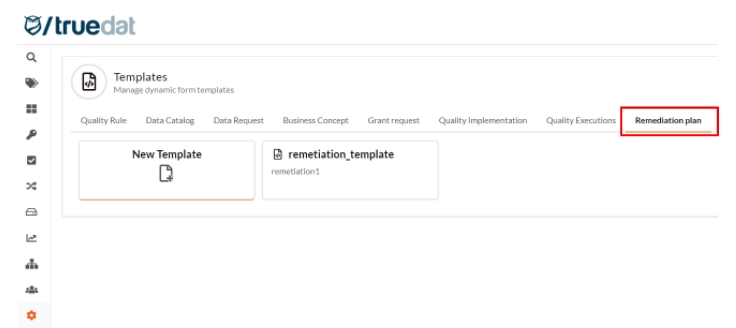

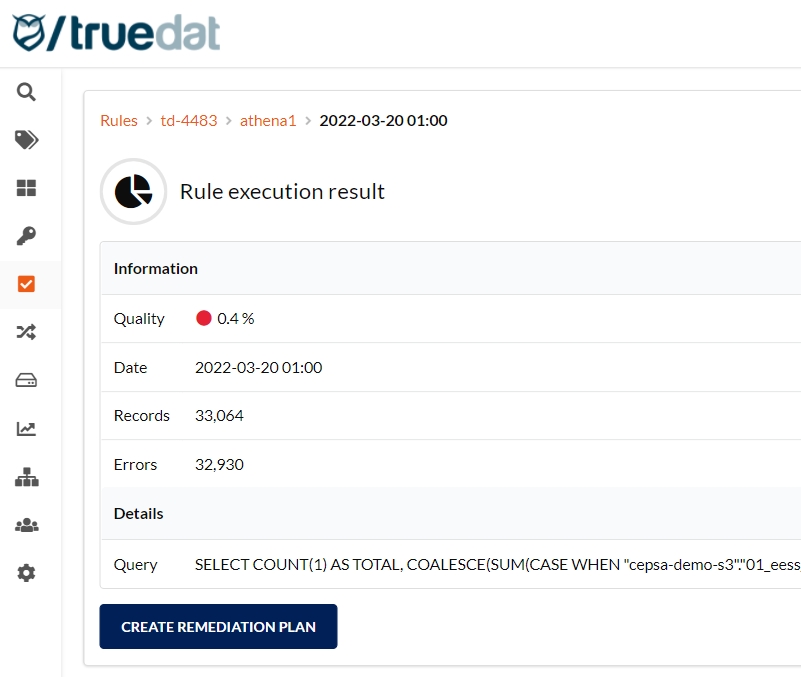

Additional information such as remediation plan details can be recorded linked to the result of an implementation execution. This additional information is managed by a type of template that has to be defined beforehand by an admin user.

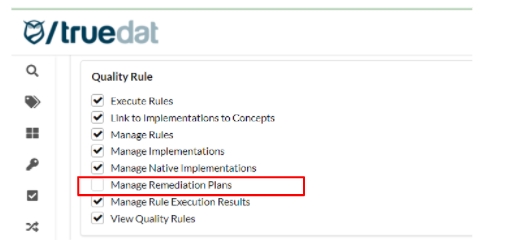

Only roles with the permission "Manage Remediation Plans" can create, edit or delete the remediation plans.

In the details view of the result of an implementation execution you can create a remediation plan that is linked to that result. Click on the 'Create remediation plan' button and fill in the information as defined in the template.

Remediation plans can be edited and deleted by clicking on the relevant button in the "Remediation Plan section". Only those users with the right permission will be able to do so.

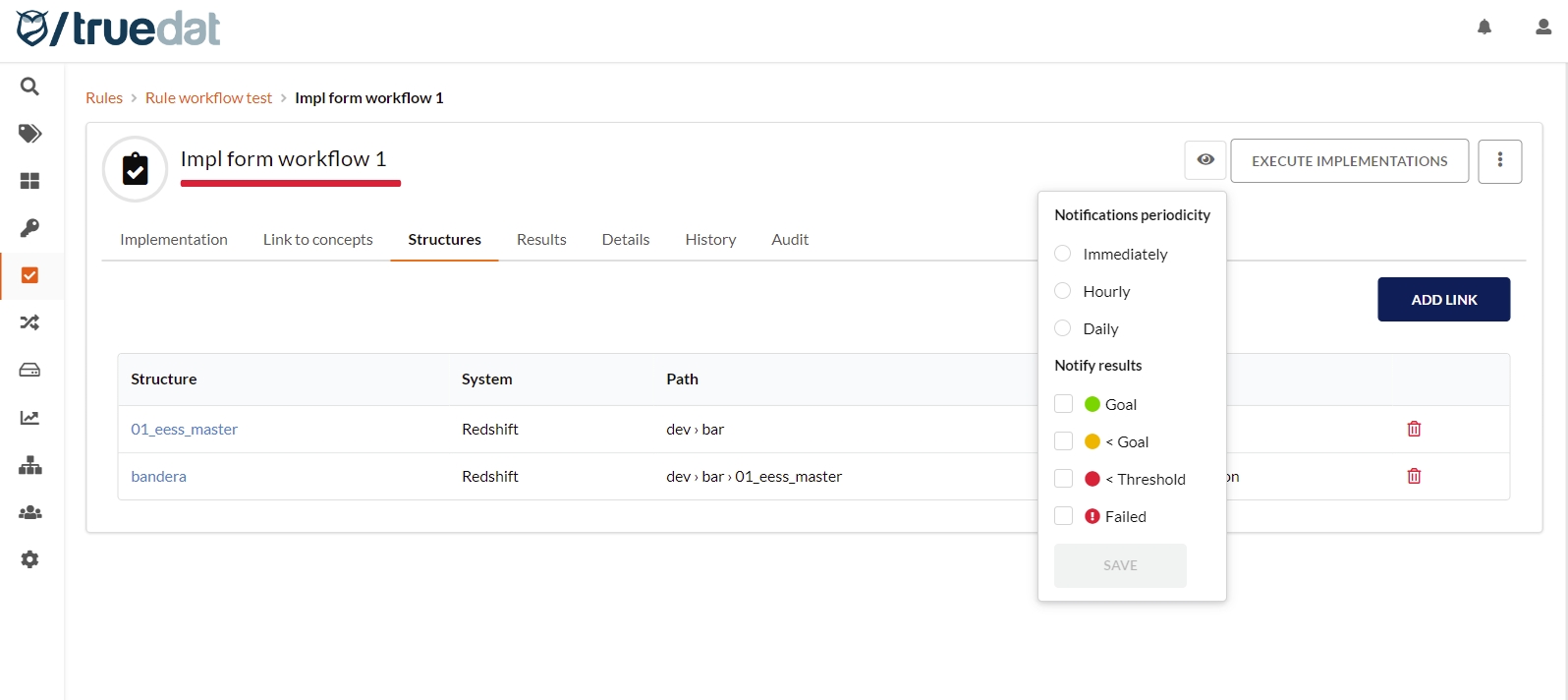

Notification periodicity:

Immediately: The user will receive the notification when the result is received in Truedat.

Hourly: Each hour the user will receive an email with all the results received in Truedat matching the configuration.

Daily: The user will receive a digest email with all results that match the given criteria.

Notify results: User will select what type of results are to be sent.

Goal: Results that are above the goal

< Goal: Results that are between threshold and goal

< Threshold: Results that are below the threshold

Failed: When the execution fails and no result is produced.
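The combination of periodicity and result types can be pictured as a filter followed by a grouping step. The Python sketch below is only an illustration: the `Subscription` class, the event identifiers and `build_messages` are hypothetical names, and the actual delivery mechanism in Truedat is not described here.

```python
from dataclasses import dataclass

EVENTS = {"goal", "below_goal", "below_threshold", "failed"}
PERIODICITIES = {"immediately", "hourly", "daily"}


@dataclass(frozen=True)
class Subscription:
    periodicity: str     # one of PERIODICITIES
    events: frozenset    # subset of EVENTS the user wants to receive

    def __post_init__(self):
        if self.periodicity not in PERIODICITIES:
            raise ValueError(f"unknown periodicity: {self.periodicity}")
        if not self.events <= EVENTS:
            raise ValueError("unknown event type in subscription")


def build_messages(subscription: Subscription, results: list) -> list:
    """Group the matching results into the messages to be sent.

    'immediately' yields one message per result; 'hourly' and 'daily'
    yield a single message with every matching result of the period.
    """
    matching = [r for r in results if r["event"] in subscription.events]
    if not matching:
        return []
    if subscription.periodicity == "immediately":
        return [[r] for r in matching]
    return [matching]


results = [
    {"implementation": "impl_0001", "event": "below_threshold"},
    {"implementation": "impl_0002", "event": "goal"},
    {"implementation": "impl_0003", "event": "failed"},
]
daily = Subscription("daily", frozenset({"below_threshold", "failed"}))
print(build_messages(daily, results))
```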

Bulk upload from a CSV file: You can also load the information from a CSV file. In the Data Quality Rules main screen, click on the button on the right-hand side. The file format will depend on the template you may have defined for your quality rules in your installation.

Using the available operators, the conditions to be applied to the validation must be defined. It is mandatory to define at least one validation to perform. It is possible to include AND and OR conditions, each of which is created by clicking on the corresponding button.

If the implementation was defined with drill-down criteria, then you can view the results for each of the segments by clicking on the icon next to the result.

In the Results tab of an implementation, an icon is shown next to those results that have a remediation plan.

Clicking on the icon will take you to the details of the execution and the remediation plan.

Users can subscribe to quality rules and to quality implementations by clicking on the corresponding icon and choosing what type of events they want to watch: result below threshold, result between threshold and goal, result over goal or failed execution. If the chosen event occurs for the given quality rule/implementation, the user will receive a notification, which can be viewed via the bell at the top right, and will also be notified by email.